Microsoft Forefront TMG and UAG – A feature comparison

Let’s begin

First of all, let’s briefly describe Forefront TMG and Forefront UAG.

Forefront TMG

Forefront Threat Management Gateway 2010 (TMG) is the successor of ISA Server 2006. For a detailed comparison between ISA Server 2006 and Forefront TMG read the following article. Forefront TMG is a Multilayer Enterprise Firewall with several features:

- Stateful packet filtering

- Application layer firewalling

- HTTP filter

- HTTPS inspection

- URL filtering

- Malware inspection

- VPN server (client VPN and site-to-site VPN)

- Web proxy and web caching server

- Forward and reverse proxy

- E-mail protection gateway

- Intrusion Prevention (IPS) and Intrusion Detection (IDS) system

Forefront TMG is available in two editions: Standard and Enterprise. For an overview of the Forefront TMG editions read the following article.

System requirements for Forefront TMG:

| Component | Minimum requirements |

| CPU | 64-bit, 1.86 GHz, 2 core (1 CPU x dual core) processor |

| Memory | 2 GB, 1 GHz RAM |

| Hard Disk | 2.5 GB available space. This is exclusive of the hard disk space required for caching or for temporarily storing files during malware inspection. One local hard disk partition that is formatted with the NTFS file system |

| Network adapters | One network adapter that is compatible with the computer’s operating system, for communication with the Internal network |

| Operating system | Windows Server 2008. Version: SP2 or R2. Edition: Standard, Enterprise or Datacenter |

| Windows Roles and Features | These Roles and Features are installed by the Forefront TMG Preparation Tool: Network Policy Server, Routing and Remote Access Services, Active Directory Lightweight Directory Services Tools, Network Load Balancing Tools, Windows PowerShell |

| Other software | Microsoft .NET Framework 3.5 SP1, Windows Web Services API, Windows Update, Microsoft Windows Installer 4.5 |

Table 1

Forefront UAG

Forefront Unified Access Gateway 2010 (UAG) is the successor of Microsoft IAG (Intelligent Application Gateway) and is designed to control inbound access to corporate resources from several client types, such as Windows, Linux, and Macintosh clients, including mobile devices. One of the major strengths of Forefront UAG is the so-called Endpoint Access Policy, which gives clients access to internal resources only when a predefined set of rules, defined by UAG administrators, is satisfied. You can think of Forefront UAG Endpoint Access Policies as an enhanced version of NAP (Network Access Protection). Forefront UAG enhances the basic web server publishing options found in Forefront TMG by integrating a deep understanding of the applications published, the state of health of the devices being used to gain access, and the user’s identity.

Forefront UAG provides portal support for gaining access to internal resources. A portal is a website where users can gain access to different published applications like OWA, Remote Desktop connections, SSL VPN, Microsoft CRM, SharePoint and many others.

Forefront UAG supports several authentication providers like Active Directory, Netscape, LDAP, RADIUS, OTP and many more. Another primary development goal of Forefront UAG is remote access via SSL VPN and a technique called DirectAccess.

System requirements for Forefront UAG:

| Component | Minimum requirements |

| CPU | 2.66 gigahertz (GHz) or faster processor. Dual core CPU |

| Memory | 4 GB |

| Hard Disk | 2.5 gigabyte (GB) (in addition to Windows requirements) |

| Network adapters | Two network adapters that are compatible with the computer operating system. These network adapters are used for communication with the internal corporate network, and the external network (Internet). Note that deploying Forefront UAG with a single network adapter is not supported |

| Operating system | Forefront UAG can be installed on computers running the Windows Server 2008 R2 Standard or Windows Server 2008 R2 Enterprise 64-bit operating systems. Forefront UAG must be a domain member |

| Windows Roles and Features | Network Policy Server, Routing and Remote Access Services, Active Directory Lightweight Directory Services Tools, Message Queuing Services, Web Server (IIS) Tools, Network Load Balancing Tools, Windows PowerShell |

| Other software | Microsoft .NET Framework 3.5 SP1, Windows Web Services API, Windows Update, Microsoft Windows Installer 4.5, SQL Server Express 2005. Forefront TMG is installed as a firewall during Forefront UAG setup. Following setup, Forefront TMG is configured to protect the Forefront UAG server. The Windows Server 2008 R2 DirectAccess component is automatically installed |

Table 2

Comparing Forefront TMG and Forefront UAG

During my work as a Consultant and Trainer for Forefront products, I noticed that many customers were not completely aware of the main differences between Forefront TMG and UAG and were uncertain which product best fits a given scenario.

I will try to give a short description of each product to help you make the right decision:

Forefront TMG is the Enterprise Edge Firewall that protects the internal network from the Internet and provides protected access from internal resources to the Internet. Forefront TMG has powerful publishing features to publish internal services to the Internet, such as Outlook Web Access, Exchange ActiveSync and a whole slew of other services, but it is limited in intelligent publishing: it allows only limited control over the client devices that access the published internal resources. In short, Forefront TMG acts as a firewall for incoming and outgoing requests.

Forefront UAG is used to extend and enhance the basic publishing features of Forefront TMG, and comes with extended features like portals, SSL VPN (note: Forefront TMG supports SSL VPN in the form of SSTP), DirectAccess and powerful Endpoint Access Policies to control the client devices accessing the Forefront UAG server. During a Forefront UAG installation, Forefront TMG is also installed, but only to protect the Forefront UAG server. In short, Forefront UAG acts as an Application Layer Gateway and is the solution for incoming access to internal resources from the Internet.

The following screenshot gives a clear explanation about Forefront TMG and Forefront UAG usage scenarios:

Figure 1: Forefront TMG and Forefront UAG comparison (Source: Microsoft)

Forefront TMG unsupported configurations

As with every solution there are supported and unsupported configurations. The unsupported configurations with Forefront TMG are:

- Forefront TMG is not supported on a 32-bit operating system

– Forefront TMG can only be installed on a 64-bit operating system (Windows Server 2008 SP2 and 2008 R2)

- Forefront TMG is not supported on Windows Server 2003

- Forefront TMG is not supported on all editions of Windows Server 2008

– Installation of Forefront TMG is only supported on the Standard, Enterprise and Datacenter editions and is not supported on Windows Server Core

- Installing EMS on a Forefront TMG computer is not supported

– EMS is the Enterprise Management Server (formerly known as CSS)

- In-place upgrade from ISA Server 2004/2006 to Forefront TMG is not supported

– You have to export the ISA Server configuration and import it on a fresh TMG installation

- In-place upgrade from Windows Server 2008 SP2 to Windows Server 2008 R2 is not supported

– Forefront TMG does not support upgrading to Windows Server 2008 R2 while Forefront TMG is installed

- Forefront TMG installed on a domain controller is not supported, except with Forefront TMG SP1, where the installation of TMG is allowed on a Read Only Domain Controller (RODC)

- Forefront TMG Client is not supported on Windows 2000

- Forefront TMG does not support Firewall Client 2000

- Workgroup deployment limitations

– User group authentication is only possible with LDAP (for publishing scenarios) or RADIUS (for incoming and outgoing access)

– Client certificates cannot be used as primary authentication

– User mapping is not supported (except for PAP and SPAP)

– Group Policy deployment of certificates for HTTPS inspection is not available

– Automatic Web proxy detection using Active Directory Auto Discover is not possible

- Multiple firewall products

– Installing other firewall products (such as a personal firewall) on a Forefront TMG server is not supported
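The platform-related restrictions in this list can be expressed as a small pre-installation checklist. The following Python sketch is illustrative only: the `Host` class and the `tmg_platform_issues` function are hypothetical names, not part of any Microsoft tool, and the rules it encodes are limited to the ones listed above.

```python
from dataclasses import dataclass
from typing import List

# Values taken from the list above; all names here are illustrative.
SUPPORTED_OS = {"Windows Server 2008 SP2", "Windows Server 2008 R2"}
SUPPORTED_EDITIONS = {"Standard", "Enterprise", "Datacenter"}


@dataclass
class Host:
    """Hypothetical description of a planned Forefront TMG server."""
    os_name: str
    edition: str
    is_64bit: bool
    is_server_core: bool = False
    other_firewall_installed: bool = False


def tmg_platform_issues(host: Host) -> List[str]:
    """Return the unsupported conditions from the list that apply to host."""
    issues = []
    if not host.is_64bit:
        issues.append("32-bit operating systems are not supported")
    if host.os_name not in SUPPORTED_OS:
        issues.append(f"{host.os_name} is not a supported operating system")
    if host.edition not in SUPPORTED_EDITIONS:
        issues.append(f"{host.edition} edition is not supported")
    if host.is_server_core:
        issues.append("Server Core installations are not supported")
    if host.other_firewall_installed:
        issues.append("other firewall products must not be installed")
    return issues


if __name__ == "__main__":
    planned = Host("Windows Server 2003", "Standard", is_64bit=False)
    for issue in tmg_platform_issues(planned):
        print(issue)
```

An empty result only means that none of the conditions encoded here apply; it does not replace the checks done by the Forefront TMG Preparation Tool.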

Forefront UAG support boundaries

Forefront UAG has some supported and unsupported configurations. The support boundaries are:

Forefront UAG and Forefront UAG DirectAccess

Forefront UAG can be used to publish internal servers via Web portal or directly (similar to Forefront TMG).

Forefront UAG can be used as a DirectAccess server to extend the DirectAccess functionality that comes with Windows Server 2008 R2. Please note the following:

- Forefront UAG can be configured as a publishing server and as a DirectAccess server on the same machine.

- Servers in a Forefront UAG array can be configured to provide remote access to published servers and to act as a DirectAccess server at the same time.

- It is not possible to use the Network Connector application (a form of VPN) when Forefront UAG is configured as a DirectAccess server.

IPv6 support

In order to support DirectAccess which is IPv6-based, Forefront UAG allows the following IPv6 traffic:

- Inbound authenticated IPv6 traffic (using IPsec).

- Native IPv6 traffic from and to the Forefront UAG DirectAccess server.

- Inbound and outbound IPv6 transition technologies (6to4, Teredo, IP-HTTPS and ISATAP).

No other IPv6 traffic is supported by Forefront UAG.

Forefront TMG running on Forefront UAG

A frequent misunderstanding is the role of Forefront TMG with Forefront UAG. I have spoken with many customers in the past, who wanted to replace their Forefront TMG servers with Forefront UAG to benefit from the Forefront UAG features. But Microsoft has clear statements about supported and unsupported configurations, which are:

- Forefront TMG is installed during a Forefront UAG installation.

- Forefront TMG is installed as a complete product, and is not modified to run on a Forefront UAG server.

- Forefront UAG uses Forefront TMG, as follows:

– Forefront TMG acts as a firewall, protecting only the Forefront UAG server.

– Forefront UAG uses Forefront TMG infrastructure and functionality in some deployment and monitoring scenarios.

- Changes made through the Forefront UAG console are pushed to the Forefront TMG configuration and only in this way!

It is possible to configure some parts of Forefront TMG through the Forefront TMG Management console (MMC), but the following is not supported:

- Forefront TMG will be automatically installed during a Forefront UAG installation; a manual Forefront TMG installation is not supported.

- Forefront UAG must be installed on a clean Windows Server 2008 SP2/R2 machine without Forefront TMG installed.

- Forefront TMG will be removed if you remove Forefront UAG.

- A manual uninstallation of Forefront TMG is not supported.

- Forefront TMG as a forward proxy for outbound Internet access.

- Forefront TMG as a site-to-site VPN server.

- Forefront TMG as an intrusion protection system.

- Publishing Forefront TMG via Forefront UAG.

Supported Forefront TMG configurations

You can use the Forefront TMG Management console (MMC) for the following configurations:

- Creating access rules to limit access for users, groups, and networks for VPN remote access. These access rules must be placed under the firewall policies automatically created by Forefront UAG.

- Monitoring, logging and reporting.

- Modifying Forefront TMG system policies to enable access from Forefront TMG to internal servers and to give access from internal servers to Forefront TMG.

- Publishing Exchange SMTP/SMTPS.

- Publishing Exchange IMAP/IMAPS.

- Publishing Exchange POP3/POP3S.

- Publishing Office Communications Server (OCS), with the exception of OCS web access, which should be published with Forefront UAG.

Forefront UAG placement

Because of these limitations, you must plan where to place Forefront UAG in your network environment. Possible placements are:

- Forefront UAG in a DMZ (perimeter) scenario with a front and a back firewall in place.

- Forefront UAG placed in parallel with your existing firewall.

You might need to open several Firewall ports for correct communications with Forefront UAG. You will find more information about these deployments here.

Conclusion

In this article, I went through a detailed comparison between Forefront TMG and Forefront UAG features, and discussed the support boundaries of Forefront UAG and the unsupported configurations of Forefront TMG. I hope that this article helps you decide which product is the right one for your deployment.

Restore Database From SQL Server 2008 to SQL Server 2005 Part 1 – 3

PART 1:

Problem

When you restore or attach a database created on SQL Server 2008 to SQL Server 2005 or SQL Server 2000, you will see error messages like the examples below.

Backup and Restore

You have backed up a database from SQL Server 2008. If you try to restore the backup file to SQL Server 2005, you will receive the following error message:

An exception occurred while executing a Transact-SQL statement or batch. (Microsoft.SqlServer.ConnectionInfo)

Additional information:

-> The media family on device ‘the backup file‘ is incorrectly formed. SQL Server cannot process this media family.

RESTORE HEADERONLY is terminating abnormally. (Microsoft SQL Server, Error: 3241)

Detach and Attach

You have detached a database from SQL Server 2008. If you try to attach the detached database file to SQL Server 2005, you will receive the following error message:

Attach database failed for Server ‘SQL Server name’. (Microsoft.SqlServer.Smo)

Additional information:

-> An exception occurred while executing a Transact-SQL statement batch. (Microsoft.SqlServer.ConnectionInfo)

-> The database ‘database name’ cannot be opened because it is version 655. This server supports version 611 and earlier. A downgrade path is not supported.

Could not open new database ‘database name’. CREATE DATABASE is aborted. (Microsoft SQL Server, Error: 948)
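The numbers in this message are internal database versions: 655 belongs to the SQL Server 2008 database and 611 is the highest version that the SQL Server 2005 instance can open. As a minimal sketch of the rule behind the error, the Python snippet below compares these versions. The values 611 and 655 come from the message above; 539 for SQL Server 2000 is a commonly documented value that is not stated in this article, and the function name `can_attach` is hypothetical.

```python
# Internal database versions per SQL Server release.
# 611 and 655 appear in the error message above; 539 is an
# assumption added for completeness (not from this article).
DB_VERSION = {
    "SQL Server 2000": 539,
    "SQL Server 2005": 611,
    "SQL Server 2008": 655,
}


def can_attach(source: str, target: str) -> bool:
    """A database can only be opened by a server whose internal
    version is the same as or higher than the database version."""
    return DB_VERSION[source] <= DB_VERSION[target]


if __name__ == "__main__":
    # The scenario from this post: 2008 database on a 2005 server.
    print(can_attach("SQL Server 2008", "SQL Server 2005"))
    # The opposite direction (an upgrade) is allowed.
    print(can_attach("SQL Server 2005", "SQL Server 2008"))
```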

Solution

These problems occur because backup files and detached database files are not backward compatible. You cannot restore or attach a database created on a higher version of SQL Server to a lower version of SQL Server.

However, there are some alternatives that can help you move a database to a lower version of SQL Server. I have divided them into separate parts.

- Part 2: Generate SQL Server Scripts Wizard. This solution creates a SQL Server script file using a wizard. You then simply execute the script file on SQL Server 2005 or SQL Server 2000, so the destination ends up with the same objects and data as the source database. But there are some disadvantages:

  - If the source database contains lots of data, you will have a large script file.

  - The generated file is plain text. Anyone who has access to the file can read it, so you should delete the script file after the restoration.

- Part 3: Import and Export Wizard. This solution exports data to the target SQL Server using a wizard. It is more secure and efficient than the first solution, but you can export only tables and views.

————————————————————————————————————————————————————————————————————————————————————————————

PART 2: Generate SQL Server Scripts Wizard

Now let’s look at the first solution to these problems.

In this post, you will see how to back up the ‘Northwind’ database by generating a SQL Server script on SQL Server 2008, and then restore the ‘Northwind’ database by executing that script on SQL Server 2005.

Step-by-step

- In Microsoft SQL Server Management Studio, connect to the SQL Server 2008 instance. Right-click the database that you want to back up and select Tasks -> Generate Scripts.

- On Welcome to the Generate SQL Server Scripts Wizard, click Next.

- On Select Database, select Northwind and check Script all objects in the selected database. Then, click Next.

- On Choose Script Options, set Script Database Create to False and Script for Server Version to SQL Server 2005.

Note: You can set Script Database Create to True if the database files are stored in the same location on the source and the destination.

- Still on Choose Script Options, scroll down and set Script Data to True. Click Next.

Note: Set this option to True to include the data of each table in the script.

- On Output Option, select a destination for the output script. Select Script to file and browse to the location that you want. Click Next.

- On Script Wizard Summary, you can review your selections. Then, click Finish.

- On Generate Script Progress, the wizard is creating a SQL Server script.

- When the script has been generated, you will find the output file at the location you selected.

- Connect to SQL Server 2005 and create a new database: right-click Databases -> New Database.

Note: If you set Script Database Create to True in step 4, you don’t have to create the database manually.

- Type ‘Northwind’ as database name. Click OK.

- Execute the SQL Server script file that you have created.

- Now the database ‘Northwind’ is restored on SQL Server 2005.
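When Script Data is set to True, the generated file contains the table data as plain INSERT statements, which is why the script grows with the amount of data and why anyone with access to the file can read the contents. The Python sketch below shows the general idea of turning rows into such statements. It is not the wizard's actual output format; the helper names and the sample row are made up for illustration.

```python
def sql_literal(value) -> str:
    """Render a Python value as a T-SQL literal (simplified)."""
    if value is None:
        return "NULL"
    if isinstance(value, bool):
        return "1" if value else "0"
    if isinstance(value, (int, float)):
        return str(value)
    # Strings: double any single quote and use a Unicode literal.
    return "N'" + str(value).replace("'", "''") + "'"


def insert_statement(table: str, row: dict) -> str:
    """Build one INSERT statement for a single row."""
    columns = ", ".join(f"[{name}]" for name in row)
    values = ", ".join(sql_literal(v) for v in row.values())
    return f"INSERT INTO [{table}] ({columns}) VALUES ({values});"


if __name__ == "__main__":
    # Hypothetical sample row, not real Northwind data.
    row = {"ShipperID": 1, "CompanyName": "O'Brien Freight", "Phone": None}
    print(insert_statement("Shippers", row))
```

Every row becomes one line of text like this, so a table with millions of rows produces a script of millions of lines.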

———————————————————————————————————————————————————————————-

PART 3: Export Data Wizard

In this post, you will see how to export the tables of the ‘Northwind’ database from SQL Server 2008 to SQL Server 2005 using the Export Data wizard.

Step-by-step

- In Microsoft SQL Server Management Studio, connect to the SQL Server 2008 instance. Right-click the database that you want to export data from and select Tasks -> Export Data.

- On Welcome to SQL Server Import and Export Wizard, click Next.

- On Choose a Data Source, select the source from which to copy data. Set Data source to SQL Server Native Client 10.0. Verify that Server name is the source of SQL Server 2008 that you want and select Database as ‘Northwind’. Click Next.

- On Choose a Destination, specify where to copy the data to. Set Destination to SQL Server Native Client 10.0. Type the server name of the destination SQL Server 2005 instance. You can also click Refresh to verify that you can connect to the specified server. Currently, I don’t have a ‘Northwind’ database on SQL Server 2005, so I will create a new one: click New.

- On Create Database, type name as ‘Northwind’ and click OK.

- Back to Choose a Destination, I have created ‘Northwind’ database so select it as Database. Click Next.

- On Specify Table Copy or Query, select Copy data from one or more tables or views and click Next.

- On Select Source Tables and Views, select the tables that you want to export. In this example, I select all tables in the ‘Northwind’ database.

- On Save and Run Package, click Next.

- On Complete the Wizard, you can verify the choices made in the wizard. Then, click Finish.

- Wait until the wizard finishes execution.

- The tables of the ‘Northwind’ database have now been exported successfully from SQL Server 2008 to SQL Server 2005.

How to backup and restore database on Microsoft SQL Server 2005

Step-by-step

Backup a database.

Now I will back up the AdventureWorks database on BKKSQL2005, which runs Microsoft SQL Server 2005, to a file.

- Connect to source server. Open Microsoft SQL Server Management Studio and connect to BKKSQL2005.

- Right-click on the AdventureWorks database. Select Tasks -> Backup…

- On the Back Up Database window, you can configure the backup settings. If you’re not familiar with these settings, you can leave the default values. Here are some short descriptions.

- Database – the database that you want to back up.

- Backup type – you can choose between two options: Full and Differential. If this is the first time you back up the database, you must select Full.

- Name – the name of this backup; you can choose any name you want.

- Destination – the file that the backup will be written to. You can leave the default, which backs up to “C:\Program Files\Microsoft SQL Server\MSSQL.1\MSSQL\Backup”.

- Click OK to start the backup.

- Wait a while and you’ll see a pop-up message when the backup is finished.

- Browse to the destination and you’ll see a backup file (.bak format), which you can copy to another server for the restore in the next step. The default backup directory is “C:\Program Files\Microsoft SQL Server\MSSQL.1\MSSQL\Backup”.
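The choices made in the Back Up Database window correspond roughly to a T-SQL BACKUP DATABASE statement. As a hedged sketch, the Python helper below assembles such a statement from the same settings (database, backup type, name, destination). The function name `backup_statement` is hypothetical, and the file name `AdventureWorks.bak` inside the default directory is an assumption, since the steps above only name the directory.

```python
def quote_literal(text: str) -> str:
    """Quote a string as a T-SQL Unicode literal."""
    return "N'" + text.replace("'", "''") + "'"


def backup_statement(database: str, backup_file: str, name: str,
                     differential: bool = False) -> str:
    """Build a BACKUP DATABASE statement for a full or differential backup."""
    db = database.replace("]", "]]")  # escape closing brackets in the name
    options = []
    if differential:
        options.append("DIFFERENTIAL")
    options.append(f"NAME = {quote_literal(name)}")
    return (f"BACKUP DATABASE [{db}] TO DISK = {quote_literal(backup_file)} "
            f"WITH {', '.join(options)};")


if __name__ == "__main__":
    # Assumed file name in the default directory mentioned above.
    target = (r"C:\Program Files\Microsoft SQL Server\MSSQL.1\MSSQL"
              r"\Backup\AdventureWorks.bak")
    print(backup_statement("AdventureWorks", target, "AdventureWorks full backup"))
```

The printed statement can be pasted into a query window on the source server; as in the wizard, the first backup of a database has to be a full one.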

Restore the database.

Next, I will restore the AdventureWorks database from the file that I created above to BK01BIZ001, which runs Microsoft SQL Server Express Edition.

- Copy the backup file from the source server to the destination server. I’ve copied it into the same directory as on the source server.

- Connect to destination server. Open Microsoft SQL Server Management Studio Express and connect to BK01BIZ001.

- Right-click on Databases. Select Restore Database…

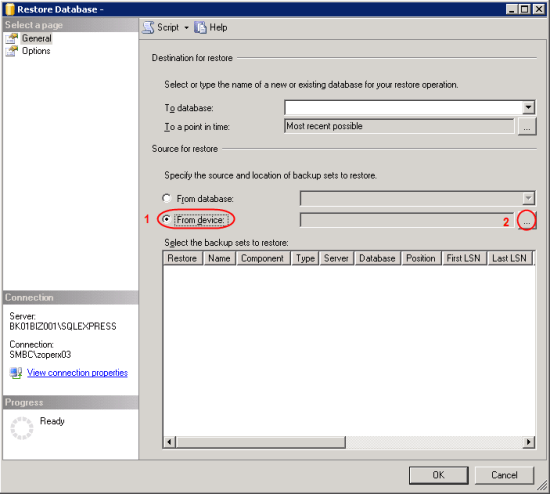

- The Restore Database window appears. On Source for restore, select From device and click the […] button to browse for the file.

- On Specify Backup, ensure that Backup media is “File” and click Add.

- On Locate Backup File, select the backup file. This is the backup file that was created in the Backup a database section and copied to this server. Click OK, then click OK again.

- Back to Restore Database window.

- On Destination for restore, select “AdventureWorks”.

Note: If you haven’t added the backup file on Source before (steps 4-6), you won’t see the database name on Destination.

- On Source for restore, check the box in front of the backup name (in the Restore column).

- Click OK.

- Wait until the restore finishes; a pop-up message will notify you.

- Now you’ll see the restored database on the destination SQL Server.
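The restore steps above correspond roughly to two T-SQL statements: RESTORE HEADERONLY, which reads the backup sets in the file (the list shown under Source for restore), and RESTORE DATABASE, which performs the restore. The Python sketch below only builds these statements as text. The function name `restore_statements` and the example path are hypothetical, and it assumes the database files can be placed in the same locations as on the source server; otherwise additional options such as MOVE would be needed.

```python
from typing import List


def quote_literal(text: str) -> str:
    """Quote a string as a T-SQL Unicode literal."""
    return "N'" + text.replace("'", "''") + "'"


def restore_statements(database: str, backup_file: str) -> List[str]:
    """Build the statements that inspect a backup file and restore it."""
    disk = quote_literal(backup_file)
    name = database.replace("]", "]]")  # escape closing brackets in the name
    return [
        # Lists the backup sets contained in the file.
        f"RESTORE HEADERONLY FROM DISK = {disk};",
        # Restores the database and brings it online.
        f"RESTORE DATABASE [{name}] FROM DISK = {disk} WITH RECOVERY;",
    ]


if __name__ == "__main__":
    # Hypothetical path; use the location the backup file was copied to.
    for statement in restore_statements("AdventureWorks",
                                        r"C:\Backup\AdventureWorks.bak"):
        print(statement)
```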

Understanding Core Exchange Server 2010 Design Plans

In This Chapter

- Planning for Exchange Server 2010

- Understanding AD Design Concepts for Exchange Server 2010

- Determining Exchange Server 2010 Placement

- Configuring Exchange Server 2010 for Maximum Performance and Reliability

- Securing and Maintaining an Exchange Server 2010 Implementation

The fundamental capabilities of Microsoft Exchange Server 2010 are impressive. Improvements to security, reliability, and scalability enhance an already road-tested and stable Exchange Server platform. Along with these impressive credentials comes an equally impressive design task. Proper design of an Exchange Server 2010 platform will do more than practically anything to reduce headaches and support calls in the future. Many complexities of Exchange Server might seem daunting, but with a full understanding of the fundamental components and improvements, the task of designing the Exchange Server 2010 environment becomes manageable.

This chapter focuses specifically on the Exchange Server 2010 components required for design. Key decision-making factors influencing design are presented and tied into overall strategy. All critical pieces of information required to design Exchange Server 2010 implementations are outlined and explained.

Planning for Exchange Server 2010

Designing Exchange Server used to be a fairly simple task. When an organization needed email and the decision was made to go with Exchange Server, the only real decision to make was how many Exchange servers were needed. Primarily, organizations really needed only email and eschewed any “bells and whistles.”

Exchange Server 2010, on the other hand, takes messaging to a whole new level. Organizations no longer require only an email system; they also require a high level of system availability and resilience, along with other messaging and unified communications functionality. After the productivity capabilities of an enterprise email platform have been demonstrated, the need for more productivity improvements arises. Consequently, it is wise to understand the integral design components of Exchange Server before beginning a design project.

Outlining Significant Changes in Exchange Server 2010

Exchange Server 2010 is the evolution of a product that has consistently been improving over the years from its roots. Since the Exchange 5.x days, Microsoft has released dramatic improvements with Exchange 2000 Server and later Exchange Server 2003. Microsoft then followed upon the success of Exchange Server 2003 with some major architectural changes with Exchange Server 2007. This latest version, Exchange Server 2010, uses a similar architecture to Exchange Server 2007 but adds, extends, and perfects elements of Exchange Server design.

The major areas of improvement in Exchange Server 2010 include many of the concepts and technologies introduced in Exchange Server 2007 but expand upon them and include additional improvements. Key areas improved upon in Exchange Server 2010 architecture include the following:

- Database Availability Groups (DAGs)—The Exchange Server 2007 concept of Clustered Continuous Replication (CCR) has been greatly improved and replaced with a concept called Database Availability Groups (DAGs), which allow a copy of an Exchange Server mailbox database to exist in up to 16 locations within an Exchange Server organization. Because Continuous Replication is no longer limited to two servers, there is no longer any need for concepts such as Standby Continuous Replication (SCR) or Local Continuous Replication (LCR) because they are all superseded by DAG technology.

- Transport and access improvements—All client access is now funneled through the Client Access server (CAS) role in an organization, which allows for improvements in client access and limited end-user disruption during mailbox moves and maintenance. In addition, Exchange Server 2010 guards against lost emails due to hardware failures by keeping “shadow copies” of mail data on Hub and Edge Transport servers that can be re-sent in the event of loss.

- Integrated archiving capabilities—Exchange Server 2010 provides users and administrators the ability to archive messages for the purpose of cleaning up a mailbox of old messages, as well as for legal reasons for applying a retention policy on key messages. In addition, a second archive mailbox can be associated with a user’s primary mailbox, allowing seamless access to the archived messages from OWA or full Outlook. Users can simply drag and drop messages into their archive folder, or a policy or rule can be set to have messages automatically moved to the archive folder.

- “Access anywhere” improvements—Microsoft has focused a great deal of Exchange Server 2010 development time on new access methods for Exchange Server, including an enhanced Outlook Web App (OWA) that works with a variety of Microsoft and third-party browsers, Microsoft ActiveSync improvements, improved Outlook Voice Access (OVA), unified messaging support, and Outlook Anywhere enhancements. Having these multiple access methods greatly increases the design flexibility of Exchange Server because end users can access email via multiple methods.

- Protection and compliance enhancements—Exchange Server 2010 now includes a variety of antispam, antivirus, and compliance mechanisms to protect the integrity of messaging data. Exchange Server 2010 also includes the capability to establish a second, integrated archive mailbox for users that is made available through all traditional access mechanisms, including OWA. This allows for older archived items to be available to users without the mail actually being stored in the individual’s mailbox, enabling an organization to do better storage management and content management of mail messages throughout the enterprise.

- Admin tools improvements and Exchange PowerShell scripting—PowerShell was introduced as the primary management tool in Exchange Server 2007; Exchange Server 2010 improves upon those PowerShell capabilities and adds additional PowerShell cmdlets and functions. Indeed, the graphical user interface (GUI) itself sits on top of the scripting engine and simply fires scripts based on the task that an administrator chooses in the GUI. This allows for an unprecedented level of control.

It is important to incorporate the concepts of these improvements into any Exchange Server design project because their principles often drive the design process.

Reviewing Exchange Server and Operating System Requirements

Exchange Server 2010 has some specific requirements, both hardware and software, that must be taken into account when designing. These requirements fall into several categories:

- Hardware

- Operating system

- Active Directory

- Exchange Server version

Each requirement must be addressed before Exchange Server 2010 can be deployed.

Reviewing Hardware Requirements

It is important to design Exchange Server hardware to scale out to the user load expected for up to 3 years from the date of implementation. This helps retain the value of the investment put into Exchange Server. Specific hardware configuration advice is offered in later sections of this book.

Reviewing Operating System (OS) Requirements

Exchange Server 2010 is optimized for installation on Windows Server 2008 (Service Pack 2 or later) or Windows Server 2008 R2. The increases in security and the fundamental changes to Internet Information Services (IIS) in Windows Server 2008 provide the basis for many of the improvements in Exchange Server 2010. The specific compatibility matrix, which indicates compatibility between Exchange Server versions and operating systems, is illustrated in Table 3.1.

Table 3.1 Exchange Server Version Compatibility

| Version | Win NT 4.0 | Windows 2000 | Windows 2003 | Windows 2003 R2 | Windows 2008 | Windows 2008 R2 |

| Exchange Server 5.5 | Yes | Yes | No | No | No | No |

| Exchange 2000 Server | No | Yes | No | No | No | No |

| Exchange Server 2003 | No | Yes | Yes | Yes | No | No |

| Exchange Server 2007 | No | No | Yes | Yes | Yes | Yes |

| Exchange Server 2010 | No | No | No | No | Yes | Yes |

*64-bit editions only supported
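For planning scripts, Table 3.1 can be captured as a simple lookup. The following Python sketch transcribes the table as shown above; the names `MATRIX`, `is_supported` and `supported_systems` are illustrative, and the 64-bit footnote is not modeled.

```python
from typing import List

# Column order of Table 3.1.
OPERATING_SYSTEMS = [
    "Win NT 4.0", "Windows 2000", "Windows 2003",
    "Windows 2003 R2", "Windows 2008", "Windows 2008 R2",
]

# One row per Exchange version, transcribed from Table 3.1.
MATRIX = {
    "Exchange Server 5.5":  [True, True, False, False, False, False],
    "Exchange 2000 Server": [False, True, False, False, False, False],
    "Exchange Server 2003": [False, True, True, True, False, False],
    "Exchange Server 2007": [False, False, True, True, True, True],
    "Exchange Server 2010": [False, False, False, False, True, True],
}


def is_supported(exchange: str, os_name: str) -> bool:
    """Look up one cell of the compatibility matrix."""
    return MATRIX[exchange][OPERATING_SYSTEMS.index(os_name)]


def supported_systems(exchange: str) -> List[str]:
    """List every operating system marked Yes for an Exchange version."""
    return [os_name for os_name, ok in zip(OPERATING_SYSTEMS, MATRIX[exchange]) if ok]


if __name__ == "__main__":
    print(supported_systems("Exchange Server 2010"))
```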

Understanding Active Directory (AD) Requirements

Exchange Server originally maintained its own directory. With the advent of Exchange 2000 Server, however, the directory for Exchange Server was moved to the Microsoft Active Directory, the enterprise directory system for Windows. This gave greater flexibility and consolidated directories but at the same time increased the complexity and dependencies for Exchange Server. Exchange Server 2010 uses the same model but requires specific AD functional levels and domain controller specifics to run properly.

Exchange Server 2010, while requiring an AD forest in all deployment scenarios, has certain flexibility when it comes to the type of AD it uses. It is possible to deploy Exchange Server in the following scenarios:

- Single forest—The simplest and most traditional design for Exchange Server is one where Exchange Server is installed within the same forest used for user accounts. This design also has the least amount of complexity and synchronization concerns to worry about.

- Resource forest—The Resource forest model in Exchange Server 2010 involves the deployment of a dedicated forest exclusively used for Exchange Server itself, and the only user accounts within it are those that serve as a placeholder for a mailbox. End users do not log on to these accounts; instead, they are given access to them across cross-forest trusts from their particular user forest to the Exchange Server forest. More information on this deployment model can be found in Chapter 4.

- Multiple forests—Different multiple forest models for Exchange Server are presently available, but they do require a greater degree of administration and synchronization. In these models, different Exchange Server organizations live in different forests across an organization. These different Exchange Server organizations are periodically synchronized to maintain a common Global Address List (GAL). More information on this deployment model can also be found in Chapter 4.

It is important to determine which design model will be chosen before proceeding with an Exchange Server deployment because it is complex and expensive to change the AD structure of Exchange Server after it has been deployed.

Outlining Exchange Server Version Requirements

As with previous versions of Exchange Server, there are separate Enterprise and Standard versions of the Exchange Server 2010 product. The Standard Edition supports all Exchange Server 2010 functionality, except that it is limited to no more than five databases on a single server.

Note – Unlike previous versions of the software, Microsoft provides only a single set of media for Exchange Server 2010. After installation, the server version is set by entering a license key. A server can be upgraded from the Trial version to Standard/Enterprise or from Standard to Enterprise. It cannot, however, be downgraded.

Scaling Exchange Server 2010

Exchange 2000 originally provided the basis for servers that could easily scale out to thousands of users in a single site, if necessary. Exchange Server 2003 further improved the situation by introducing Messaging Application Programming Interface (MAPI) compression and RPC over HTTP. Exchange Server 2007 and its 64-bit architecture allowed for even further scalability and reduced IO levels. Finally, Exchange Server 2010 and the separation of client traffic to load-balanced Client Access Servers enable the client tier to be much more scalable than with previous versions.

Site consolidation concepts enable organizations that might have previously deployed Exchange servers in remote locations to have those clients access their mailboxes across wide area network (WAN) links or dial-up connections by using the enhanced Outlook 2007/2010 or OWA clients. This solves the problem that previously existed of having to deploy Exchange servers and global catalog (GC) servers in remote locations, with only a handful of users, and greatly reduces the infrastructure costs of setting up Exchange Server.

Having Exchange Server 2010 Coexist with an Existing Network Infrastructure

In a design scenario, it is necessary to identify any systems that require access to email data or services. For example, it might be necessary to enable a third-party monitoring application to relay mail off the Simple Mail Transfer Protocol (SMTP) engine of Exchange Server so that alerts can be sent. Identifying these needs during the design portion of a project is therefore important.

Identifying Third-Party Product Functionality

Microsoft built specific hooks into Exchange Server 2010 to enable third-party applications to improve upon the built-in functionality provided by the system. For example, built-in support for antivirus scanning, backups, and unified messaging exists right out of the box, although functionality is limited without the addition of third-party software. The most common additions to Exchange Server implementations are the following:

- Antivirus

- Backup

- Phone/PBX integration

- Fax software

Understanding AD Design Concepts for Exchange Server 2010

After all objectives, dependencies, and requirements have been mapped out, the process of designing the Exchange Server 2010 environment can begin. Decisions should be made in the following key areas:

- AD design

- Exchange server placement

- Global catalog placement

- Client access methods

Understanding the AD Forest

Because Exchange Server 2010 relies on the Windows Server 2008 AD for its directory, it is important to include AD in the design plans. In many situations, an AD implementation, whether based on Windows 2000 Server, Windows Server 2003, or Windows Server 2008, already exists in the organization. In these cases, it is necessary only to plan for the inclusion of Exchange Server into the forest.

Note – Exchange Server 2010 has several key requirements for AD. First, all domains and the forest must be at Windows Server 2003 functional levels or higher. Second, it requires that at least one domain controller in each site that includes Exchange Server be at least Windows Server 2003 SP2 or Windows Server 2008.
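The two requirements in the preceding note can be expressed as a simple checklist. The following Python sketch is illustrative only: the level labels, the inventory dictionaries, and the function name are hypothetical and would have to be filled in from a real AD inventory. It is not a Microsoft tool or API.

```python
# Hypothetical labels, ordered from oldest to newest (illustrative only).
FUNCTIONAL_LEVELS = ["2000", "2003", "2008", "2008 R2"]
DC_VERSIONS = ["2000", "2003", "2003 SP1", "2003 SP2", "2008", "2008 R2"]


def at_least(value, minimum, ordering):
    """True if value appears at or after minimum in the ordered list."""
    return ordering.index(value) >= ordering.index(minimum)


def check_ad_prerequisites(forest_level, domain_levels, exchange_site_dcs):
    """Return a list of problems; an empty list means both rules are met.

    forest_level      -- functional level label of the forest
    domain_levels     -- dict of domain name -> functional level label
    exchange_site_dcs -- dict of AD site name -> list of DC version labels,
                         for every site that will contain Exchange Server
    """
    problems = []
    # Rule 1: forest and all domains at Windows Server 2003 level or higher.
    if not at_least(forest_level, "2003", FUNCTIONAL_LEVELS):
        problems.append("forest functional level is below Windows Server 2003")
    for domain, level in sorted(domain_levels.items()):
        if not at_least(level, "2003", FUNCTIONAL_LEVELS):
            problems.append(f"domain {domain} is below Windows Server 2003 level")
    # Rule 2: each Exchange site needs one DC at 2003 SP2 or later.
    for site, dcs in sorted(exchange_site_dcs.items()):
        if not any(at_least(v, "2003 SP2", DC_VERSIONS) for v in dcs):
            problems.append(f"site {site} has no DC at Windows Server 2003 SP2 or later")
    return problems


if __name__ == "__main__":
    for problem in check_ad_prerequisites(
        "2003", {"abc.internal": "2003"}, {"HQ": ["2003 SP1", "2008"]}
    ):
        print(problem)
```

An empty result only means the two rules from the note are satisfied for the data supplied; it says nothing about the other prerequisites of an actual installation.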

If an AD structure is not already in place, a new AD forest must be established. Designing the AD forest infrastructure can be complex, and can require nearly as much thought into design as the actual Exchange Server configuration itself. Therefore, it is important to fully understand the concepts behind AD before beginning an Exchange Server 2010 design.

In short, a single “instance” of AD consists of a single AD forest. A forest is composed of AD trees, which are contiguous domain namespaces in the forest. Each tree is composed of one or more domains, as illustrated in Figure 3.1.

Figure 3.1

Multitree forest design.

Certain cases exist for using more than one AD forest in an organization:

- Political limitations—Some organizations have specific political reasons that force the creation of multiple AD forests. For example, if a merged corporate entity requires separate divisions to maintain completely separate information technology (IT) infrastructures, more than one forest is necessary.

- Security concerns—Although the AD domain serves as a de facto security boundary, the “ultimate” security boundary is effectively the forest. In other words, it is possible for a user account in one domain to be used to compromise other domains within the same forest. Although these types of attacks are not common and are difficult to carry out, highly security-conscious organizations should implement separate AD forests.

- Application functionality—A single AD forest shares a common directory schema, which is the underlying structure of the directory and must be unique across the entire forest. In some cases, separate branches of an organization require that certain applications, which need extensions to the schema, be installed. This might not be possible or might conflict with the schema requirements of other branches. These cases might require the creation of a separate forest.

- Exchange-specific functionality (resource forest)—In certain circumstances, it might be necessary to install Exchange Server 2010 into a separate forest, to enable Exchange Server to reside in a separate schema and forest instance. An example of this type of setup is an organization with two existing AD forests that creates a third forest specifically for Exchange Server and uses cross-forest trusts to assign mailbox permissions.

The simplest designs often work the best. The same principle applies to AD design. The designer should start with the assumption that a simple forest and domain structure will work for the environment. However, when factors such as those previously described create constraints, multiple forests can be established to satisfy the requirements of the constraints.

Understanding the AD Domain Structure

After the AD forest structure has been chosen, the domain structure can be laid out. As with the forest structure, it is often wise to consider a single domain model for the Exchange Server 2010 directory. In fact, if deploying Exchange Server is the only consideration, this is often the best choice.

There is one major exception to the single domain model: the placeholder domain model. The placeholder domain model has an isolated domain serving as the root domain in the forest. The user domain, which contains all production user accounts, would be located in a separate domain in the forest, as illustrated in Figure 3.2.

Figure 3.2

The placeholder domain model.

The placeholder domain structure increases security in the forest by segregating high-level schema-access accounts into a completely separate domain from the regular user domain. Access to the placeholder domain can be audited and restricted to maintain tighter control on the critical schema. The downside to this model, however, is the fact that the additional domain requires a separate set of domain controllers, which increases the infrastructure costs of the environment. In general, this makes this domain model less desirable for smaller organizations because the increased cost often outweighs the added security. Larger organizations, however, can consider the increased security provided by this model.

Reviewing AD Infrastructure Components

Several key components of AD must be installed within an organization to ensure proper Exchange Server 2010 and AD functionality. In smaller environments, many of these components can be installed on a single machine, but all need to be located within an environment to ensure server functionality.

Outlining the Domain Name System (DNS) Impact on Exchange Server 2010 Design

In addition to being tightly integrated with AD, Exchange Server 2010 is closely tied to the Domain Name System (DNS). DNS serves as the lookup agent for Exchange Server 2010, AD, and most new Microsoft applications and services. DNS translates common names into computer-recognizable IP addresses. For example, the name www.cco.com translates into the IP address 12.155.166.151. AD and Exchange Server 2010 require that at least one DNS server be available so that name resolution occurs properly.
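The name-to-address translation described above can be observed from any machine with a few lines of Python. This is a minimal sketch using only the standard library; the helper name resolve is an illustrative choice, and the answer returned for any given name depends on the resolver the machine is configured to use.

```python
import socket


def resolve(name):
    """Return the sorted, de-duplicated IP addresses the local resolver gives for name."""
    # getaddrinfo returns one tuple per (family, socket type) combination;
    # the address string is the first element of the sockaddr field.
    infos = socket.getaddrinfo(name, None)
    return sorted({info[4][0] for info in infos})


if __name__ == "__main__":
    # A literal IP address needs no DNS query and is returned unchanged.
    print(resolve("127.0.0.1"))
```

Calling resolve with a host name instead of a literal address sends the query to the configured DNS server, which is the same dependency that AD and Exchange Server have.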

Given the dependency that both Exchange Server 2010 and AD have on DNS, it is an extremely important design element. For an in-depth look at DNS and its role in Exchange Server 2010, see Chapter 6, “Understanding Network Services and Active Directory Domain Controller Placement for Exchange Server 2010.”

Reviewing DNS Namespace Considerations for Exchange Server

Given Exchange Server 2010’s dependency on DNS, a common DNS namespace must be chosen for the AD structure to reside in. In multiple tree domain models, this could be composed of several DNS trees, but in small organization environments, this normally means choosing a single DNS namespace for the AD domain.

There is a great deal of confusion between the DNS namespace in which AD resides and the email DNS namespace in which mail is delivered. Although they are often the same, in many cases there are differences between the two namespaces. For example, CompanyABC’s AD structure is composed of a single domain named abc.internal, and the email domain to which mail is delivered is companyabc.com. The separate namespace, in this case, was created to reduce the security vulnerability of maintaining the same DNS namespace both internally and externally (published to the Internet).

For simplicity, CompanyABC could have chosen companyabc.com as its AD namespace. This choice increases the simplicity of the environment by making the AD logon user principal name (UPN) and the email address the same. For example, the user Pete Handley is pete@companyabc.com for logon, and pete@companyabc.com for email. This option is the choice for many organizations because the need for user simplicity often trumps the higher security.

Optimally Locating Global Catalog Servers

Because all Exchange Server directory lookups use AD, it is vital that the essential AD global catalog information is made available to each Exchange server in the organization. For many small offices with a single site, this simply means that it is important to have a full global catalog server available in the main site.

The global catalog is an index of the AD database that contains a partial copy of its contents. All objects within the AD forest are referenced within the global catalog, which enables users to search for objects located in other domains. Not every attribute of each object is replicated to the global catalogs, only those attributes that are commonly used in search operations, such as first name and last name. Exchange Server 2010 uses the global catalog for the email-based lookups of names, email addresses, and other mail-related attributes.

Note – Exchange Server 2010 cannot make use of Windows Server 2008 Read Only Domain Controllers (RODCs) or Read Only Global Catalog (ROGC) servers, so be sure to plan for full GCs and DCs for Exchange Server.

Because full global catalog replication can consume more bandwidth than standard domain controller replication, it is important to design a site structure to reflect the available WAN link capacity. If a sufficient amount of capacity is available, a full global catalog server can be deployed. If, however, capacity is limited, universal group membership caching can be enabled to reduce the bandwidth load.

Understanding Multiple Forests Design Concepts Using Microsoft Forefront Identity Manager (FIM)

Forefront Identity Manager (FIM) enables out-of-the-box replication of objects between two separate AD forests. This concept becomes important for organizations with multiple Exchange Server implementations that want a common Global Address List for the company. Earlier iterations of the product required an in-depth knowledge of scripting to synchronize objects between two forests. The current FIM release, on the other hand, includes built-in scripts that can establish replication between two Exchange Server 2010 AD forests, making integration between forests easier.

Determining Exchange Server 2010 Placement

Previous versions of Exchange Server essentially forced many organizations into deploying servers in sites with more than a dozen or so users. With the concept of site consolidation in Exchange Server 2010, however, smaller numbers of Exchange servers can service clients in multiple locations, even if they are separated by slow WAN links. For small and medium-sized organizations, this essentially means that only a handful of servers is required, depending on availability needs. Larger organizations require a larger number of Exchange servers, depending on the number of sites and users. In addition, Exchange Server 2010 introduces new server role concepts, which should be understood so that the right server can be deployed in the right location.

Understanding Exchange Server 2010 Server Roles

Exchange Server 2010 firmed up the server role concept outlined with Exchange Server 2007. Before Exchange Server 2007/2010, server functionality was loosely termed, such as referring to an Exchange server as an OWA or front-end server, bridgehead server, or a mailbox or back-end server. In reality, there was no set terminology that was used for Exchange server roles. Exchange Server 2010, on the other hand, distinctly defines specific roles that a server can hold. Multiple roles can reside on a single server, or multiple servers can have the same role. By standardizing on these roles, it becomes easier to design an Exchange Server environment by designating specific roles for servers in specific locations.

Exchange Server 2010 includes the following server roles:

- Client access server (CAS)—The CAS role allows for client connections via nonstandard methods such as Outlook Web App (OWA), Exchange ActiveSync, Post Office Protocol 3 (POP3), and Internet Message Access Protocol (IMAP). Exchange Server 2010 also forces MAPI traffic and effectively all client traffic through the CAS layer. CAS servers are the replacement for Exchange 2000/2003 front-end servers and can be load balanced for redundancy purposes. As with the other server roles, the CAS role can coexist with other roles for smaller organizations with a single server, for example.

- Edge Transport server—The Edge Transport server role was introduced with Exchange Server 2007, and consists of a standalone server that typically resides in the demilitarized zone (DMZ) of a firewall. This server filters inbound SMTP mail traffic from the Internet for viruses and spam, and then forwards it to internal Hub Transport servers. Edge Transport servers keep a local AD Lightweight Directory Services (AD LDS, formerly AD Application Mode or ADAM) instance that is synchronized with the internal AD structure via a mechanism called EdgeSync. This helps to reduce the attack surface of Exchange Server. The Edge Transport role can only exist by itself on a server; it cannot be combined with other roles.

- Hub Transport server—The Hub Transport server role acts as a mail bridgehead for mail sent between servers in one AD site and mail sent to other AD sites. There needs to be at least one Hub Transport server within an AD site that contains a server with the mailbox role, but there can also be multiple Hub Transport servers to provide for redundancy and load balancing. HT roles are also responsible for message compliance and rules. The HT role can be combined with other roles on a server, and is often combined with the CAS role.

- Mailbox server—The mailbox server role is intuitive; it acts as the storehouse for mail data in users’ mailboxes and down-level public folders if required. All connections to the mailbox servers are proxied through the CAS servers.

- Unified Messaging server—The Unified Messaging server role allows a user’s Inbox to be used for voice messaging and fax capabilities.

Any or all of these roles can be installed on a single server or on multiple servers. For smaller organizations, a single server holding all Exchange Server roles is sufficient. For larger organizations, a more complex configuration might be required. For more information on designing large and complex Exchange Server implementations, see Chapter 4.
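The placement rules stated in the role descriptions (Edge Transport must stand alone, and every AD site that contains a Mailbox server needs at least one Hub Transport server) lend themselves to a quick design check. The sketch below is a hedged illustration: the role labels, the server dictionary layout, and the function name are assumptions made for this example, not part of any Exchange tooling.

```python
# Role labels used by this sketch only (hypothetical identifiers).
ROLES = {"CAS", "HubTransport", "Mailbox", "EdgeTransport", "UnifiedMessaging"}


def validate_role_placement(servers):
    """Check a planned layout against the two placement rules in the text.

    servers -- dict of server name -> {"site": AD site name or None,
                                        "roles": set of role labels}
    Returns a list of problems; an empty list means no rule is violated.
    """
    problems = []
    for name, info in sorted(servers.items()):
        roles = info["roles"]
        unknown = roles - ROLES
        if unknown:
            problems.append(f"{name}: unknown roles {sorted(unknown)}")
        # Rule 1: Edge Transport cannot be combined with any other role.
        if "EdgeTransport" in roles and len(roles) > 1:
            problems.append(f"{name}: Edge Transport must be the only role on the server")

    # Rule 2: every site with a Mailbox server needs a Hub Transport server.
    mailbox_sites = {i["site"] for i in servers.values() if "Mailbox" in i["roles"]}
    hub_sites = {i["site"] for i in servers.values() if "HubTransport" in i["roles"]}
    for site in sorted(mailbox_sites - hub_sites, key=str):
        problems.append(f"site {site}: Mailbox server present but no Hub Transport server")
    return problems


if __name__ == "__main__":
    plan = {
        "EX1": {"site": "HQ", "roles": {"CAS", "HubTransport", "Mailbox"}},
        "EDGE1": {"site": None, "roles": {"EdgeTransport"}},
    }
    print(validate_role_placement(plan))
```

A single all-in-one server, as described for smaller organizations, passes both checks as long as it does not also carry the Edge Transport role.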

Understanding Environment Sizing Considerations

In some cases with very small organizations, the number of users is small enough to warrant the installation of all AD and Exchange Server 2010 components on a single server. This scenario is possible, as long as all necessary components—DNS, a global catalog domain controller, and Exchange Server 2010—are installed on the same hardware. In general, however, it is best to separate AD and Exchange Server onto separate hardware wherever possible.

Identifying Client Access Points

At its core, Exchange Server 2010 essentially acts as a storehouse for mailbox data. Access to the mail within the mailboxes can take place through multiple means, some of which might be required by specific services or applications in the environment. A good understanding of what these services are and if and how your design should support them is warranted.

Outlining MAPI Client Access with Outlook 2007

The “heavy” client of Outlook, Outlook 2007, has gone through a significant number of changes, both to the look and feel of the application, and to the back-end mail functionality. The look and feel has been streamlined based on Microsoft research and customer feedback. The latest Outlook client, Outlook 2010, uses the Office ribbon introduced with Office 2007 to improve the client experience. Outlook connects with Exchange servers via CAS servers, improving the scalability of the environment.

In addition to MAPI compression, Outlook 2010/2007 expands upon the Outlook 2003 ability to run in cached mode, which automatically detects slow connections between client and server and adjusts Outlook functionality to match the speed of the link. When a slow link is detected, Outlook can be configured to download only email header information. When emails are opened, the entire email is downloaded, including attachments if necessary. This drastically reduces the amount of data sent across the wire because only those emails that are actually opened are downloaded in full.

The Outlook 2010/2007 client is the most effective and full-functioning client for users who are physically located close to an Exchange server. With the enhancements in cached mode functionality, however, Outlook 2010/2007 can also be effectively used in remote locations. When making the decision about which client to deploy as part of a design, you should keep these concepts in mind.

Accessing Exchange Server with Outlook Web App (OWA)

The Outlook Web App (OWA) client in Exchange Server 2010 has been enhanced and optimized for performance and usability. There is now very little difference between the full function client and OWA. With this in mind, OWA is now an even more efficient client for remote access to the Exchange server. The one major piece of functionality that OWA does not have, but the full Outlook 2007 client does, is offline mail access support. If this is required, the full client should be deployed.

Using Exchange ActiveSync (EAS)

Exchange ActiveSync (EAS) support in Exchange Server 2010 allows a mobile client, such as a Pocket PC device or mobile phone, to synchronize with the Exchange server, allowing for access to email from a handheld device. EAS also supports Direct Push technology, which allows for instantaneous email delivery to supported handheld devices such as Windows Mobile 5.0/6.x or other third-party ActiveSync enabled devices.

Understanding the Simple Mail Transfer Protocol (SMTP)

The Simple Mail Transfer Protocol (SMTP) is an industry-standard protocol that is widely used across the Internet for mail delivery. SMTP is built in to Exchange servers and is used by Exchange Server systems for relaying mail messages from one system to another, which is similar to the way that mail is relayed across SMTP servers on the Internet. Exchange Server is dependent on SMTP for mail delivery and uses it for internal and external mail access.

By default, Exchange Server 2010 uses DNS to route messages destined for the Internet out of the Exchange Server topology. If, however, a user wants to forward messages to a smarthost before they are transmitted to the Internet, an SMTP connector can be manually set up to enable mail relay out of the Exchange Server system. SMTP connectors also reduce the risk and load on an Exchange server by off-loading the DNS lookup tasks to the SMTP smarthost. SMTP connectors can be specifically designed in an environment for this type of functionality.

Using Outlook Anywhere (Previously Known as RPC over HTTP)

One very effective and improved client access method to Exchange Server 2010 is known as Outlook Anywhere. This technology was previously referred to as RPC over HTTP(s) or Outlook over HTTP(s). This technology enables standard Outlook 2010/2007/2003 access across firewalls. The Outlook client encapsulates Outlook RPC packets into HTTP or HTTPS packets and sends them across standard web ports (80 and 443), where they are then extracted by the Exchange Server 2010 system. This technology enables Outlook to communicate using its standard RPC protocol, but across firewalls and routers that normally do not allow RPC traffic. The potential uses of this protocol are significant because in many situations it removes the need for cumbersome VPN clients.

Configuring Exchange Server 2010 for Maximum Performance and Reliability

After decisions have been made about AD design, Exchange server placement, and client access, optimization of the Exchange server itself helps ensure efficiency, reliability, and security for the messaging platform.

Designing an Optimal Operating System Configuration for Exchange Server

As previously mentioned, Exchange Server 2010 only operates on the Windows Server 2008 (Service Pack 2 or later) or Windows Server 2008 R2 operating systems. The enhancements to the operating system, especially in regard to security, make Windows Server 2008 the optimal choice for Exchange Server. The Standard Edition of Windows Server 2008 is sufficient for any Exchange Server installation.

Note – Contrary to popular misconception, the Enterprise Edition of Exchange Server can be installed on the Standard Edition of the operating system, and vice versa. Although there has been a lot of confusion on this concept, both versions of Exchange Server were designed to interoperate with either version of Windows.

Configuring Disk Options for Performance

The single most important design element that improves the efficiency and speed of Exchange Server is the separation of the Exchange Server database and the Exchange Server logs onto separate hard drive volumes. Because of the inherent differences in the type of hard drive operations performed (logs perform primarily write operations, databases primarily read), separating these elements onto separate volumes dramatically increases server performance. Figure 3.3 illustrates some examples of how the database and log volumes can be configured.

Figure 3.3

Database and log volume configuration.

On Server1, the OS and logs are located on the same mirrored C:\ volume and the database is located on a separate RAID-5 drive set. With Server2, the configuration is taken up a notch, with the OS only on C:\, the logs on D:\, and the database on the RAID-5 E:\ volume. Finally, Server3 is configured in the optimal configuration, with separate volumes for each database and a volume for the log files. The more advanced a configuration, the more detailed and complex the drive configuration can get. However, the most important factor that must be remembered is to separate the Exchange Server database from the logs wherever possible.

Note – With the use of Database Availability Groups (DAGs) in Exchange Server 2010, the performance of the disk infrastructure has become less of a concern. DAGs enable an organization’s mailboxes to be spread across multiple servers and to exist in multiple locations (up to 16), which reduces the need for expensive SAN disks and enables Exchange Server to be installed on DAS or SATA disk.

Working with Multiple Exchange Server Databases

Exchange Server 2010 Database Availability Groups (DAGs) allow for multiple databases to be installed across multiple servers and to have multiple versions of those databases in more than one location. This allows for the creation of multiple large databases that reside on cheaper disks, which in turn allows for larger mailbox sizes. It also has the following advantages:

- Reduce database restore time—Multiple databases (rather than a smaller number of larger databases) take less time to restore from tape. This concept can be helpful if there is a group of users who require quicker recovery time (such as management). All mailboxes for this group could then be placed in a separate database to provide quicker recovery time in the event of a server or database failure.

- Provide for separate mailbox limit policies—Each database can be configured with different mailbox storage limits. For example, the standard user database could have a 200-MB limit on mailboxes, and the management database could have a 500-MB limit.

- Mitigate risk by distributing user load—By distributing user load across multiple databases, the risk of losing all user mail connectivity is reduced. For example, if a single database that contained all users failed, no one would be able to send or receive mail. If those users were divided across three databases, however, only one third of those users would be unable to send or receive mail in the event of a database failure.
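The arithmetic behind the last point can be written out directly. This small Python sketch assumes users are spread evenly across the databases and that exactly one database fails with no other copy available; the function name is illustrative.

```python
from fractions import Fraction


def affected_fraction(database_count):
    """Fraction of users who lose mail when one of database_count databases fails.

    Assumes an even distribution of users and a single failed database
    with no surviving copy to fail over to.
    """
    if database_count < 1:
        raise ValueError("database_count must be at least 1")
    return Fraction(1, database_count)


if __name__ == "__main__":
    for count in (1, 3, 5):
        print(count, "database(s):", affected_fraction(count), "of users affected")
```

With one database the fraction is 1 (everyone), and with three it is 1/3, matching the example in the text. Additional database copies in a DAG reduce the impact further, which this simple model does not capture.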

Monitoring Design Concepts with System Center Operations Manager 2007 R2

The enhancements to Exchange Server 2010 do not stop with the improvements to the product itself. New functionality has been added to the Exchange Management Pack for System Center Operations Manager that enables OpsMgr to monitor Exchange servers for critical events and performance data. The OpsMgr Management Pack is preconfigured to monitor for Exchange Server-specific information and to enable administrators to proactively monitor Exchange servers. For more information on using OpsMgr to monitor Exchange Server 2010, see Chapter 20, “Using Operations Manager to Monitor Exchange Server 2010.”

Securing and Maintaining an Exchange Server 2010 Implementation

One of the greatest advantages of Exchange Server 2010 is its emphasis on security. Along with Windows Server 2008, Exchange Server 2010 was developed during and after the Microsoft Trustworthy Computing initiative, which effectively put a greater emphasis on security over new features in the products. In Exchange Server 2010, this means that the OS and the application were designed with services “Secure by Default.”

With Secure by Default, all nonessential functionality in Exchange Server must be turned on if needed. This is a complete change from the previous Microsoft model, which had all services, add-ons, and options turned on and running at all times, presenting much larger security vulnerabilities than was necessary. Designing security effectively becomes much easier in Exchange Server 2010 because it now becomes necessary only to identify components to turn on, as opposed to identifying everything that needs to be turned off.

In addition to being secure by default, Exchange Server 2010 server roles are built in to templates used by the Security Configuration Wizard (SCW), which was introduced in Service Pack 1 for Windows Server 2003. Using the SCW against Exchange Server helps to reduce the attack surface of a server.

Patching the Operating System Using Windows Server Update Services

Although Windows Server 2008 presents a much smaller target for hackers, viruses, and exploits by virtue of the Secure by Default concept, it is still important to keep the OS up to date with critical security patches and updates. Currently, two approaches can be used to automate the installation of server patches. The first method involves configuring the Windows Server 2008 Automatic Updates client to download patches from Microsoft and install them on a schedule. The second option is to set up an internal server to coordinate patch distribution and management. The solution that Microsoft supplies for this functionality is known as Windows Server Update Services (WSUS).

WSUS enables a centralized server to hold copies of OS patches for distribution to clients on a preset schedule. WSUS can be used to automate the distribution of patches to Exchange Server 2010 servers, so that the OS components will remain secure between service packs. WSUS might not be necessary in smaller environments, but can be considered in medium-sized to large organizations that want greater control over their patch management strategy.

Summary

Exchange Server 2010 offers a broad range of functionality and improvements to messaging and is well suited for organizations of any size. With proper thought for the major design topics, a robust and reliable Exchange Server email solution can be put into place that will perfectly complement the needs of any organization.

When Exchange Server design concepts have been fully understood, the task of designing the Exchange Server 2010 infrastructure can take place.

Best Practices

The following are best practices from this chapter:

- Use Database Availability Groups (DAGs) to distribute multiple copies of all mailboxes to multiple locations, taking advantage of HA and DR capabilities that are built into Exchange Server 2010.

- Separate the Exchange Server log and database files onto separate physical volumes whenever possible, but also be cognizant of the fact that Exchange Server can be installed on slower, cheaper disks when using DAGs.

- Plan for a Windows Server 2003 functional forest and at least one Windows Server 2003 SP2 or Windows Server 2008 domain controller in each site that will run Exchange Server.

- Integrate an antivirus and backup strategy into Exchange Server design.

- Keep a local copy of a full global catalog close to any Exchange servers.

- Keep the OS and Exchange Server up to date through service packs and software patches, either manually or via Windows Server Update Services.

- Keep the AD design simple, with a single forest and single domain, unless a specific need exists to create more complexity.

- Identify the client access methods that will be supported and match them with the appropriate Exchange Server 2010 technology.

- Monitor DNS functionality closely in the environment on the AD domain controllers.

New columnstore index feature in SQL Server 2012

Problem

A new feature in SQL Server 2012 is the Columnstore Index, which can be used to significantly improve query performance. In this tip we will look at how it works and how we can use it.

Solution

There are two types of storage available in the database: RowStore and ColumnStore.

In RowStore, data rows are placed sequentially on a page, while in ColumnStore the values of a single column, taken from multiple rows, are stored contiguously. A ColumnStore Index is an index that uses ColumnStore storage.

Now let’s show how we can create a ColumnStore Index and how performance can be improved.

Creating a Column Store Index

Creating a ColumnStore Index is the same as creating a NonClustered Index except we need to add the ColumnStore keyword as shown below.

The syntax of a ColumnStore Index is:

CREATE NONCLUSTERED COLUMNSTORE INDEX Index_Name ON Table_Name (Column1, Column2, … Column N)

Performance Test

I used the AdventureWorks sample database for performing tests.

-- Create the test table
USE [AdventureWorks2008R2]
GO
SET ANSI_NULLS ON
GO
SET QUOTED_IDENTIFIER ON
GO
CREATE TABLE [dbo].[Test_Person](
    [BusinessEntityID] [int] NOT NULL,
    [PersonType] [nchar](2) NOT NULL,
    [NameStyle] [dbo].[NameStyle] NOT NULL,
    [Title] [nvarchar](8) NULL,
    [FirstName] [dbo].[Name] NOT NULL,
    [MiddleName] [dbo].[Name] NULL,
    [LastName] [dbo].[Name] NOT NULL,
    [Suffix] [nvarchar](10) NULL,
    [EmailPromotion] [int] NOT NULL,
    [AdditionalContactInfo] [xml](CONTENT [Person].[AdditionalContactInfoSchemaCollection]) NULL,
    [Demographics] [xml](CONTENT [Person].[IndividualSurveySchemaCollection]) NULL,
    [rowguid] [uniqueidentifier] ROWGUIDCOL NOT NULL,
    [ModifiedDate] [datetime] NOT NULL
) ON [PRIMARY] TEXTIMAGE_ON [PRIMARY]
GO

-- We populated this table with the data stored in table Person.Person.
-- As we need plenty of data, we ran the insert 100 times.
INSERT INTO [dbo].[Test_Person]
SELECT P1.* FROM Person.Person P1
GO 100

-- At this point we have 1,997,200 rows in the table.

-- Create a clustered index on column [BusinessEntityID]
CREATE CLUSTERED INDEX [CL_Test_Person] ON [dbo].[Test_Person] ([BusinessEntityID])
GO

-- Create a nonclustered index on 3 columns
-- (named differently from the columnstore index, since index names must be unique per table)
CREATE NONCLUSTERED INDEX [NonClustered__Test_Person] ON [dbo].[Test_Person] ([FirstName], [MiddleName], [LastName])
GO

-- Create a nonclustered columnstore index on the same 3 columns
CREATE NONCLUSTERED COLUMNSTORE INDEX [ColumnStore__Test_Person] ON [dbo].[Test_Person] ([FirstName], [MiddleName], [LastName])
GO

At this point we have created the ColumnStore Index on our test table. Now we will run the SELECT query with and without the ColumnStore Index and analyze performance.

Query Without ColumnStore Index

select [LastName],Count([FirstName]),Count([MiddleName]) from dbo.Test_Person group by [LastName] Order by [LastName] OPTION (IGNORE_NONCLUSTERED_COLUMNSTORE_INDEX)

We have used the OPTION (IGNORE_NONCLUSTERED_COLUMNSTORE_INDEX) query hint so that the ColumnStore Index is not used this time.

Query With ColumnStore Index

select [LastName],Count([FirstName]),Count([MiddleName]) from dbo.Test_Person group by [LastName] Order by [LastName]

Here are the Actual Execution Plans for both queries:

We can see that the cost when using the NonClustered Index is 59%, while the cost when using the ColumnStore Index is 13%.

Now if we hover the mouse over the Index Scans we can see details for these operations. The below is a comparison:

It is clear from the results that the query runs much faster after creating the ColumnStore Index. The values of each column needed by the query are stored together on the same pages, so the query does not have to go through every single page of the table to read these columns.
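A complementary way to compare the two runs is to look at I/O and timing statistics rather than plan costs alone. The following is a minimal sketch that assumes the Test_Person table and indexes created earlier; the numbers reported will depend on your hardware and data.

```sql
USE [AdventureWorks2008R2];
GO
-- Report logical reads and CPU/elapsed time on the Messages tab.
SET STATISTICS IO ON;
SET STATISTICS TIME ON;
GO
-- Run 1: the columnstore index is ignored.
SELECT [LastName], COUNT([FirstName]), COUNT([MiddleName])
FROM dbo.Test_Person
GROUP BY [LastName]
ORDER BY [LastName]
OPTION (IGNORE_NONCLUSTERED_COLUMNSTORE_INDEX);
GO
-- Run 2: the optimizer is free to use the columnstore index.
SELECT [LastName], COUNT([FirstName]), COUNT([MiddleName])
FROM dbo.Test_Person
GROUP BY [LastName]
ORDER BY [LastName];
GO
SET STATISTICS IO OFF;
SET STATISTICS TIME OFF;
GO
```

Comparing the logical reads and elapsed times of the two runs gives a measured view alongside the plan cost percentages.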

Performing INSERT, DELETE or UPDATE Operations

We cannot perform DML (INSERT, UPDATE, DELETE) operations on a table that has a ColumnStore Index, because the index puts the table's data in a read-only mode. This feature is therefore best suited to a Data Warehouse, where most operations are read-only.

For example, if you perform a DELETE operation on a table with a ColumnStore Index you will get this error:

Msg 35330, Level 15, State 1, Line 1 DELETE statement failed because data cannot be updated in a table with a columnstore index. Consider disabling the columnstore index before issuing the DELETE statement, then rebuilding the columnstore index after DELETE is complete.

To perform the operation, we need to disable the ColumnStore Index before issuing the command:

ALTER INDEX Index_Name ON Table_Name DISABLE
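Putting the disable step together with the rebuild that the error message recommends, the full cycle might look like the sketch below. It assumes the ColumnStore__Test_Person index on the test table from earlier; the DELETE predicate is only an illustrative placeholder.

```sql
-- Disable the columnstore index so the table accepts changes again.
ALTER INDEX [ColumnStore__Test_Person] ON [dbo].[Test_Person] DISABLE;
GO
-- Hypothetical data change; substitute your own DML here.
DELETE FROM [dbo].[Test_Person]
WHERE [BusinessEntityID] = 1;
GO
-- Rebuild the index to bring it back online for queries.
ALTER INDEX [ColumnStore__Test_Person] ON [dbo].[Test_Person] REBUILD;
GO
```

While the index is disabled, queries fall back to the other indexes on the table, and the rebuild on a large table can take a while, so this pattern fits batch load windows better than frequent small changes.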

Creating a ColumnStore Index using Management Studio

Right click and select New Index and select Non-Clustered Columnstore Index…

Click Add to add the columns for the index.

After selecting the columns click OK to create the index.
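Whichever way the index is created, you can confirm that it exists by querying the catalog views. This is a small sketch that assumes the dbo.Test_Person table used earlier in this tip.

```sql
-- List the nonclustered columnstore indexes on the test table and their columns.
SELECT i.name AS index_name,
       i.type_desc,
       c.name AS column_name
FROM sys.indexes AS i
JOIN sys.index_columns AS ic
    ON ic.object_id = i.object_id
   AND ic.index_id = i.index_id
JOIN sys.columns AS c
    ON c.object_id = ic.object_id
   AND c.column_id = ic.column_id
WHERE i.object_id = OBJECT_ID(N'dbo.Test_Person')
  AND i.type_desc = N'NONCLUSTERED COLUMNSTORE'
ORDER BY i.name, ic.index_column_id;
```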

Limitations of a ColumnStore Index

- It cannot have more than 1024 columns.

- It cannot be clustered; only NonClustered ColumnStore indexes are available.

- It cannot be a unique index.

- It cannot be created on a view or indexed view.

- It cannot include a sparse column.

- It cannot act as a primary key or a foreign key.

- It cannot be changed using the ALTER INDEX statement. You have to drop and re-create the ColumnStore index instead. (Note: you can use ALTER INDEX to disable and rebuild a ColumnStore index.)

- It cannot be created with the INCLUDE keyword.

- It cannot include the ASC or DESC keywords for sorting the index.

Simple script to backup all SQL Server databases

Problem

Sometimes things that seem complicated are much easier than you think, and this is the power of using T-SQL to take care of repetitive tasks. One of these tasks may be the need to back up all databases on your server. This is not a big deal if you have a handful of databases, but I have seen several servers where there are 100+ databases on the same instance of SQL Server. You could use Enterprise Manager to back up the databases or even use Maintenance Plans, but using T-SQL is a much simpler and faster approach.

Solution

With T-SQL you can generate your backup commands, and with a cursor you can loop through all of your databases to back them up one by one. This is a very straightforward process and you only need a handful of commands to do this.

Here is the script that will allow you to back up each database within your instance of SQL Server. You will need to change the @path to the appropriate backup directory, and each backup file will take on the name of “DBnameYYYYMMDD.BAK”.

DECLARE @name VARCHAR(50) -- database name

In this script we are bypassing the system databases, but these could easily be included as well. You could also change this into a stored procedure and pass in a database name, or have it back up all databases if the parameter is left NULL. Any way you choose to use it, this script gives you a starting point for backing up all of your databases.
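The approach described above can be sketched as follows. This is not necessarily the original script; it is a minimal illustration that assumes SQL Server 2005 or later (for sys.databases), a hypothetical backup folder C:\Backup\ and a date stamp in yyyymmdd form (CONVERT style 112).

```sql
DECLARE @name     SYSNAME;        -- database name
DECLARE @path     VARCHAR(256);   -- backup directory, must end with a backslash
DECLARE @fileName VARCHAR(512);   -- full path of the backup file
DECLARE @fileDate VARCHAR(20);    -- date stamp used in the file name

SET @path = 'C:\Backup\';         -- assumption: change to your own backup directory
SET @fileDate = CONVERT(VARCHAR(20), GETDATE(), 112);  -- yyyymmdd

-- Loop over online user databases, skipping system databases and snapshots.
DECLARE db_cursor CURSOR LOCAL FAST_FORWARD FOR
    SELECT name
    FROM sys.databases
    WHERE name NOT IN ('master', 'model', 'msdb', 'tempdb')
      AND state_desc = 'ONLINE'
      AND source_database_id IS NULL;

OPEN db_cursor;
FETCH NEXT FROM db_cursor INTO @name;

WHILE @@FETCH_STATUS = 0
BEGIN
    SET @fileName = @path + @name + @fileDate + '.BAK';
    BACKUP DATABASE @name TO DISK = @fileName;

    FETCH NEXT FROM db_cursor INTO @name;
END

CLOSE db_cursor;
DEALLOCATE db_cursor;
```

To include the system databases, remove master, model and msdb from the exclusion list; tempdb cannot be backed up and should stay excluded.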

Upgrading SQL Server databases and changing compatibility levels

Problem

When upgrading databases from an older version of SQL Server using either the backup and restore method or the detach and attach method, the compatibility level does not automatically change, and therefore your databases still act as though they are running on an earlier version of SQL Server. From an overall standpoint this is not a major problem, but there are certain features that you will not be able to take advantage of unless your database compatibility level is changed. This tip will show you how to check the current compatibility level, how to change the compatibility level and also some of the differences between earlier versions and SQL Server 2005.

Solution

The first thing that you need to do is to check the compatibility level that your database is running under. As mentioned above, any database that is upgraded using the backup and restore or detach and attach method will not change the compatibility level automatically, so you will need to check each database and make the change.

Although SQL Server has changed its naming convention to SQL Server 2000, 2005 and the soon-to-be-released 2008, the internal version numbers still remain. Here is a list of the compatibility levels (versions) that you will see:

- 60 = SQL Server 6.0

- 65 = SQL Server 6.5

- 70 = SQL Server 7.0

- 80 = SQL Server 2000

- 90 = SQL Server 2005

Identifying Compatibility Level

To check the compatibility level of your databases you can use one of these methods:

Using SQL Server Management Studio, right click on the database, select “Properties” and look at the “Options” page for each database as the following image shows:

Another option is to use sp_helpdb so you can get the information for all databases at once:

EXEC sp_helpdb

Or select directly from the sys.databases catalog to get the information for all databases.

SELECT * FROM sys.databases
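Since sys.databases returns many columns, a narrower query can make the check easier to read. The sketch below lists only databases still below level 90; the threshold is an assumption that fits a SQL Server 2005 instance and should be adjusted for other versions.

```sql
-- Show databases that are still running under a pre-2005 compatibility level.
SELECT name, compatibility_level
FROM sys.databases
WHERE compatibility_level < 90
ORDER BY name;
```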

Compatibility Level for New Databases

When issuing a CREATE DATABASE statement there is not a way to select which compatibility level you want to use. The compatibility level that is used is the compatibility level of your model database.

Here is a sample CREATE DATABASE command, but there is not an option to change the compatibility level.

CREATE DATABASE [test] ON PRIMARY
( NAME = N'test', FILENAME = N'Z:\SQLData\test.mdf', SIZE = 2048KB, FILEGROWTH = 1024KB )
LOG ON
( NAME = N'test_log', FILENAME = N'Y:\SQLData\test3_log.ldf', SIZE = 3072KB, FILEGROWTH = 10% )
GO

When creating a database using SQL Server Management Studio you have the ability to change the compatibility level on the “Options” tab as follows:

If we use the “Script” option we can see that SQL Server issues the CREATE DATABASE statement and then issues “sp_dbcmptlevel” to set the database compatibility level to 80 as shown below.

CREATE DATABASE [test] ON PRIMARY
( NAME = N'test', FILENAME = N'Z:\SQLData\test.mdf', SIZE = 2048KB, FILEGROWTH = 1024KB )
LOG ON
( NAME = N'test_log', FILENAME = N'Y:\SQLData\test3_log.ldf', SIZE = 3072KB, FILEGROWTH = 10% )
GO
EXEC dbo.sp_dbcmptlevel @dbname=N'test', @new_cmptlevel=80
GO

Changing Compatibility Level

So once you have identified the compatibility level of your database and know what you want to change it to you can use the sp_dbcmptlevel system stored procedure to make the change. The command has the following syntax:

sp_dbcmptlevel [ [ @dbname = ] name ] [ , [ @new_cmptlevel = ] version ]

--to change to level 80
EXEC dbo.sp_dbcmptlevel @dbname=N'test', @new_cmptlevel=80

--to change to level 90
EXEC dbo.sp_dbcmptlevel @dbname=N'test', @new_cmptlevel=90

--or
EXEC sp_dbcmptlevel 'test', '90'
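A simple way to apply the change safely is to check the level before and after. The sketch below assumes the [test] database from the earlier examples and a SQL Server 2005 instance.

```sql
-- Check the current level.
SELECT name, compatibility_level
FROM sys.databases
WHERE name = N'test';
GO
-- Raise the database to the SQL Server 2005 level.
EXEC dbo.sp_dbcmptlevel @dbname = N'test', @new_cmptlevel = 90;
GO
-- Confirm the change took effect.
SELECT name, compatibility_level
FROM sys.databases
WHERE name = N'test';
GO
-- Note: from SQL Server 2008 onward, sp_dbcmptlevel is deprecated in favor of
--   ALTER DATABASE [test] SET COMPATIBILITY_LEVEL = 100;
```

If the application misbehaves at the new level, running the same procedure with @new_cmptlevel = 80 sets it back.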

Differences

There are several differences in how compatibility levels affect your database operations. SQL Server Books Online has a list of these differences, and the following list shows you a few of these items:

| Compatibility level setting of 80 or earlier | Compatibility level setting of 90 | Possibility of impact |
|---|---|---|
| For locking hints in the FROM clause, the WITH keyword is always optional. | With some exceptions, table hints are supported in the FROM clause only when the hints are specified with the WITH keyword. For more information, see FROM (Transact-SQL). | High |
| The *= and =* operators for outer join are supported with a warning message. | These operators are not supported; the OUTER JOIN keyword should be used. | High |
| SET XACT_ABORT OFF is allowed inside a trigger. | SET XACT_ABORT OFF is not allowed inside a trigger. | Medium |

(Source: SQL Server 2005 Books Online) For a complete list of these items look here:

In addition, each new compatibility level offers a new list of reserved keywords. Here is a list of the new keywords for SQL Server 2005.

| Compatibility level setting | Reserved keywords |
|---|---|
| 90 | PIVOT, UNPIVOT, REVERT, TABLESAMPLE |
| 80 | COLLATE, FUNCTION, OPENXML |
| 70 | BACKUP, CONTAINS, CONTAINSTABLE, DENY, FREETEXT, FREETEXTTABLE, PERCENT, RESTORE, ROWGUIDCOL, TOP |
| 65 | AUTHORIZATION, CASCADE, CROSS, DISTRIBUTED, ESCAPE, FULL, INNER, JOIN, LEFT, OUTER, PRIVILEGES, RESTRICT, RIGHT, SCHEMA, WORK |

(Source: SQL Server 2005 Books Online)

If one of these keywords is being used as an identifier and your database is set to the corresponding compatibility level, the commands will fail. To get around this you can enclose the keyword in either square brackets ([ ]) or double quotation marks (" "), such as [PIVOT] or "PIVOT".
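As an illustration of the workaround, the sketch below uses two level 90 keywords as column names. The table dbo.KeywordDemo is hypothetical and is dropped at the end.

```sql
-- Double-quoted identifiers only work when QUOTED_IDENTIFIER is ON.
SET QUOTED_IDENTIFIER ON;
GO
-- Reserved keywords used as column names, delimited two different ways.
CREATE TABLE dbo.KeywordDemo
(
    [PIVOT]   INT NOT NULL,
    "UNPIVOT" INT NOT NULL
);
GO
INSERT INTO dbo.KeywordDemo ([PIVOT], "UNPIVOT") VALUES (1, 2);

SELECT [PIVOT], "UNPIVOT"
FROM dbo.KeywordDemo;
GO
DROP TABLE dbo.KeywordDemo;
GO
```

Square brackets work regardless of the QUOTED_IDENTIFIER setting, which makes them the less fragile choice in existing code.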

Summary

The compatibility level setting is used by SQL Server to determine how certain new features should be handled. This was set up so you could migrate your databases to a later release of SQL Server without having to worry about the application breaking. This setting can be changed forwards and backwards if needed, so if you do change your compatibility level and find that there are problems, you can set the value back again until you resolve all of the issues that you may be facing during the upgrade.

In addition, there are certain features that only work if the database is set to the latest compatibility level. To get all of the benefits of the version of SQL Server you are running, you need to make sure you are using the latest compatibility level.

A guide to recover a database from SUSPECT mode

One of the worst situations I can imagine for a database professional is to get a call reporting that a production database is in a “Suspect” state and the business cannot continue. This is a “code red” situation where the DBA needs to bring the database online as soon as possible. In this article, I will outline a couple of steps which may be used as a high-level process to handle this situation.