Some of my favorite SQL scripts

-- SQL Server 2008/2008R2/(2012) Diagnostic Information Queries

-- Geir Atle Paulsen

-- SQL and OS Version information for current instance

SELECT @@VERSION AS [SQL Version Info];

_________________________________________________________________________________________________

-- Hardware information from SQL Server 2008

-- (Cannot distinguish between HT and multi-core)

-- On SQL Server 2012, physical_memory_in_bytes was replaced by physical_memory_kb (divide by 1024 for MB)

SELECT cpu_count AS [Logical CPU Count],

hyperthread_ratio AS [Hyperthread Ratio],

cpu_count/hyperthread_ratio AS [Physical CPU Count],

physical_memory_in_bytes/1048576 AS [Physical Memory (MB)],

sqlserver_start_time

FROM sys.dm_os_sys_info;

_________________________________________________________________________________________________

-- Get sp_configure values for instance

EXEC sp_configure 'Show Advanced Options', 1;

GO

RECONFIGURE;

GO

EXEC sp_configure;

-- Focus on:

-- backup compression default

-- clr enabled

-- lightweight pooling (should be zero)

-- max degree of parallelism

-- max server memory (MB)

-- optimize for ad hoc workloads

-- priority boost (should be zero)
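The settings called out above can also be read in a single result set from sys.configurations instead of scanning the full sp_configure output. This is a minimal sketch, assuming the option names match what your instance reports (some options, such as backup compression default, do not exist on older versions):

```sql
-- Show only the settings of interest; value_in_use is the value currently running.
SELECT [name], [value], value_in_use
FROM sys.configurations
WHERE [name] IN (N'backup compression default',
                 N'clr enabled',
                 N'lightweight pooling',
                 N'max degree of parallelism',
                 N'max server memory (MB)',
                 N'optimize for ad hoc workloads',
                 N'priority boost')
ORDER BY [name];
```

A difference between value and value_in_use usually means a change is waiting on RECONFIGURE or a restart.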

_________________________________________________________________________________________________

-- File Names and Paths for all databases in instance

SELECT [dbid], fileid, [filename] FROM sys.sysaltfiles;

-- Things to look at:

-- Are data files and log files on different drives?

-- Is everything on C: drive?

-- Is TempDB on dedicated drives?

-- Are there multiple data files?

_________________________________________________________________________________________________

-- Recovery model, log reuse wait description, and compatibility level for all databases on instance

SELECT [name], recovery_model_desc, log_reuse_wait_desc, [compatibility_level] FROM sys.databases;

-- Things to look at:

-- How many databases are on the instance?

-- What recovery models are they using?

-- What is the log reuse wait description?

-- What compatibility level are they on?

_________________________________________________________________________________________________

-- Clear Wait Stats

DBCC SQLPERF('sys.dm_os_wait_stats', CLEAR);

_________________________________________________________________________________________________

-- Isolate top waits for server instance since last restart or statistics clear

WITH Waits AS

(SELECT wait_type,

wait_time_ms / 1000. AS wait_time_s,

100. * wait_time_ms / SUM(wait_time_ms) OVER() AS pct,

ROW_NUMBER() OVER(ORDER BY wait_time_ms DESC) AS rn

FROM sys.dm_os_wait_stats

WHERE wait_type NOT IN ('SLEEP_TASK', 'BROKER_TASK_STOP', 'SQLTRACE_BUFFER_FLUSH', 'CLR_AUTO_EVENT', 'CLR_MANUAL_EVENT', 'LAZYWRITER_SLEEP')) -- filter out additional irrelevant waits

SELECT W1.wait_type,

CAST(W1.wait_time_s AS DECIMAL(12, 2)) AS wait_time_s,

CAST(W1.pct AS DECIMAL(12, 2)) AS pct,

CAST(SUM(W2.pct) AS DECIMAL(12, 2)) AS running_pct

FROM Waits AS W1

INNER JOIN Waits AS W2 ON W2.rn <= W1.rn

GROUP BY W1.rn, W1.wait_type, W1.wait_time_s, W1.pct

HAVING SUM(W2.pct) - W1.pct < 95; -- percentage threshold

_________________________________________________________________________________________________

INFORMATION: Common Significant Wait types with BOL explanations

*** Network Related Waits ***

ASYNC_NETWORK_IO: Occurs on network writes when the task is blocked behind the network.

*** Locking Waits ***

LCK_M_IX: Occurs when a task is waiting to acquire an Intent Exclusive (IX) lock.

LCK_M_IU: Occurs when a task is waiting to acquire an Intent Update (IU) lock.

LCK_M_S: Occurs when a task is waiting to acquire a Shared lock.

*** I/O Related Waits ***

ASYNC_IO_COMPLETION: Occurs when a task is waiting for I/Os to finish.

IO_COMPLETION: Occurs while waiting for I/O operations to complete. This wait type generally represents non-data page I/Os. Data page I/O completion waits appear as PAGEIOLATCH_* waits.

PAGEIOLATCH_SH: Occurs when a task is waiting on a latch for a buffer that is in an I/O request. The latch request is in Shared mode. Long waits may indicate problems with the disk subsystem.

PAGEIOLATCH_EX: Occurs when a task is waiting on a latch for a buffer that is in an I/O request. The latch request is in Exclusive mode. Long waits may indicate problems with the disk subsystem.

WRITELOG: Occurs while waiting for a log flush to complete. Common operations that cause log flushes are checkpoints and transaction commits.

PAGELATCH_EX: Occurs when a task is waiting on a latch for a buffer that is not in an I/O request. The latch request is in Exclusive mode.

BACKUPIO: Occurs when a backup task is waiting for data, or is waiting for a buffer in which to store data.

*** CPU Related Waits ***

SOS_SCHEDULER_YIELD: Occurs when a task voluntarily yields the scheduler for other tasks to execute. During this wait the task is waiting for its quantum to be renewed.

THREADPOOL: Occurs when a task is waiting for a worker to run on. This can indicate that the maximum worker setting is too low, or that batch executions are taking unusually long, thus reducing the number of workers available to satisfy other batches.

CXPACKET: Occurs when trying to synchronize the query processor exchange iterator. You may consider lowering the degree of parallelism if contention on this wait type becomes a problem.

_________________________________________________________________________________________________

-- Signal Waits for instance

SELECT CAST(100.0 * SUM(signal_wait_time_ms) / SUM (wait_time_ms) AS NUMERIC(20,2)) AS [%signal (cpu) waits],

CAST(100.0 * SUM(wait_time_ms - signal_wait_time_ms) / SUM (wait_time_ms) AS NUMERIC(20,2)) AS [%resource waits]

FROM sys.dm_os_wait_stats;

-- Signal waits above 10-15% are usually a sign of CPU pressure

_________________________________________________________________________________________________

-- Page Life Expectancy (PLE) value for default instance

SELECT cntr_value AS [Page Life Expectancy]

FROM sys.dm_os_performance_counters

WHERE object_name = 'SQLServer:Buffer Manager' -- Modify this if you have named instances

AND counter_name = 'Page life expectancy';

INFORMATION:

-- PLE is a good measurement of memory pressure

-- Higher PLE is better. Below 300 is generally bad.

-- Watch the trend, not the absolute value
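On a named instance the counter object is reported as MSSQL$InstanceName:Buffer Manager rather than SQLServer:Buffer Manager. One way to avoid editing the query per instance is to match on the suffix instead, as in this small sketch:

```sql
-- Matches both 'SQLServer:Buffer Manager' (default instance)
-- and 'MSSQL$<InstanceName>:Buffer Manager' (named instance).
SELECT [object_name], cntr_value AS [Page Life Expectancy]
FROM sys.dm_os_performance_counters
WHERE [object_name] LIKE N'%Buffer Manager%'
  AND counter_name = N'Page life expectancy';
```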

_________________________________________________________________________________________________

-- Buffer Pool Usage for instance

SELECT TOP(20) [type], SUM(single_pages_kb) AS [SPA Mem, Kb]

FROM sys.dm_os_memory_clerks

GROUP BY type

ORDER BY SUM(single_pages_kb) DESC;

INFORMATION:

CACHESTORE_SQLCP (SQL Plans): These are cached SQL statements or batches that aren't in stored procedures, functions and triggers.

CACHESTORE_OBJCP (Object Plans): These are compiled plans for stored procedures, functions and triggers.

CACHESTORE_PHDR (Algebrizer Trees): An algebrizer tree is the parsed SQL text that resolves the table and column names.

_________________________________________________________________________________________________

-- Individual File Sizes and space available for current database

SELECT name AS [File Name], physical_name AS [Physical Name],

size/128 AS [Total Size in MB],

size/128.0 - CAST(FILEPROPERTY(name, 'SpaceUsed') AS int)/128.0 AS [Available Space In MB]

FROM sys.database_files;

INFORMATION:

-- Look at how large and how full the files are and where they are located

-- Make sure transaction log is not full!!
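For the transaction log specifically, a quick way to see how full it is across every database on the instance is DBCC SQLPERF(LOGSPACE). A one-line sketch:

```sql
-- Returns one row per database: log size (MB), log space used (%), and status.
DBCC SQLPERF(LOGSPACE);
```

A log that stays close to 100% used is worth checking against the log_reuse_wait_desc column in sys.databases.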

_________________________________________________________________________________________________

-- Top Cached SPs By Execution Count (SQL 2008)

-- NULLIF avoids a divide-by-zero for plans cached less than a second ago;

-- the database_id filter keeps object_ids from other databases out of the join

SELECT TOP(50) p.name AS [SP Name], qs.execution_count,

ISNULL(qs.execution_count/NULLIF(DATEDIFF(Second, qs.cached_time, GetDate()), 0), 0) AS [Calls/Second],

qs.total_worker_time/qs.execution_count AS [AvgWorkerTime], qs.total_worker_time AS [TotalWorkerTime],

qs.total_elapsed_time, qs.total_elapsed_time/qs.execution_count AS [avg_elapsed_time],

qs.last_elapsed_time, qs.cached_time

FROM sys.procedures AS p

INNER JOIN sys.dm_exec_procedure_stats AS qs ON p.object_id = qs.object_id

WHERE qs.database_id = DB_ID()

ORDER BY qs.execution_count DESC;

_________________________________________________________________________________________________

-- Top Cached SPs By Total Worker time (SQL 2008). Worker time relates to CPU cost

SELECT TOP(25) p.name AS [SP Name], qs.total_worker_time AS [TotalWorkerTime],

qs.total_worker_time/qs.execution_count AS [AvgWorkerTime], qs.execution_count,

ISNULL(qs.execution_count/NULLIF(DATEDIFF(Second, qs.cached_time, GetDate()), 0), 0) AS [Calls/Second],

qs.total_elapsed_time, qs.total_elapsed_time/qs.execution_count AS [avg_elapsed_time],

qs.last_elapsed_time, qs.cached_time

FROM sys.procedures AS p

INNER JOIN sys.dm_exec_procedure_stats AS qs ON p.object_id = qs.object_id

WHERE qs.database_id = DB_ID()

ORDER BY qs.total_worker_time DESC;

_________________________________________________________________________________________________

-- Top Cached SPs By Total Logical Reads (SQL 2008). Logical reads relate to memory pressure

SELECT TOP(25) p.name AS [SP Name], qs.total_logical_reads AS [TotalLogicalReads],

qs.total_logical_reads/qs.execution_count AS [AvgLogicalReads],

ISNULL(qs.execution_count/NULLIF(DATEDIFF(Second, qs.cached_time, GetDate()), 0), 0) AS [Calls/Second],

qs.total_worker_time AS [TotalWorkerTime], qs.total_worker_time/qs.execution_count AS [AvgWorkerTime],

qs.execution_count, qs.total_elapsed_time, qs.total_elapsed_time/qs.execution_count AS [avg_elapsed_time],

qs.last_elapsed_time, qs.cached_time

FROM sys.procedures AS p

INNER JOIN sys.dm_exec_procedure_stats AS qs ON p.object_id = qs.object_id

WHERE qs.database_id = DB_ID()

ORDER BY qs.total_logical_reads DESC;

_________________________________________________________________________________________________

-- Top Cached SPs By Total Physical Reads (SQL 2008). Physical reads relate to disk I/O pressure

SELECT TOP(25) p.name AS [SP Name], qs.total_physical_reads AS [TotalPhysicalReads],

qs.total_physical_reads/qs.execution_count AS [AvgPhysicalReads],

ISNULL(qs.execution_count/NULLIF(DATEDIFF(Second, qs.cached_time, GetDate()), 0), 0) AS [Calls/Second],

qs.total_logical_reads AS [TotalLogicalReads], qs.total_logical_reads/qs.execution_count AS [AvgLogicalReads],

qs.total_worker_time AS [TotalWorkerTime], qs.total_worker_time/qs.execution_count AS [AvgWorkerTime],

qs.execution_count, qs.total_elapsed_time, qs.total_elapsed_time/qs.execution_count AS [avg_elapsed_time],

qs.last_elapsed_time, qs.cached_time

FROM sys.procedures AS p

INNER JOIN sys.dm_exec_procedure_stats AS qs ON p.object_id = qs.object_id

WHERE qs.database_id = DB_ID()

ORDER BY qs.total_physical_reads DESC;

_________________________________________________________________________________________________

-- Top Cached SPs By Total Logical Writes (SQL 2008). Logical writes relate to both memory and disk I/O pressure

SELECT TOP(25) p.name AS [SP Name], qs.total_logical_writes AS [TotalLogicalWrites],

qs.total_logical_writes/qs.execution_count AS [AvgLogicalWrites],

ISNULL(qs.execution_count/NULLIF(DATEDIFF(Second, qs.cached_time, GetDate()), 0), 0) AS [Calls/Second],

qs.total_logical_reads AS [TotalLogicalReads], qs.total_logical_reads/qs.execution_count AS [AvgLogicalReads],

qs.total_worker_time AS [TotalWorkerTime], qs.total_worker_time/qs.execution_count AS [AvgWorkerTime],

qs.execution_count, qs.total_elapsed_time, qs.total_elapsed_time/qs.execution_count AS [avg_elapsed_time],

qs.last_elapsed_time, qs.cached_time

FROM sys.procedures AS p

INNER JOIN sys.dm_exec_procedure_stats AS qs ON p.object_id = qs.object_id

WHERE qs.database_id = DB_ID()

ORDER BY qs.total_logical_writes DESC;

_________________________________________________________________________________________________

-- Possible Bad Indexes (writes > reads)

SELECT object_name(s.object_id) AS [Table Name], i.name AS [Index Name], i.index_id,

user_updates AS [Total Writes],

user_seeks + user_scans + user_lookups AS [Total Reads],

user_updates - (user_seeks + user_scans + user_lookups) AS [Difference]

FROM sys.dm_db_index_usage_stats AS s WITH (NOLOCK)

INNER JOIN sys.indexes AS i WITH (NOLOCK) ON s.object_id = i.object_id AND i.index_id = s.index_id

WHERE objectproperty(s.object_id, 'IsUserTable') = 1

AND s.database_id = db_id()

AND user_updates > (user_seeks + user_scans + user_lookups)

AND i.index_id > 1

ORDER BY [Difference] DESC, [Total Writes] DESC, [Total Reads] ASC;

_________________________________________________________________________________________________

-- Missing Indexes for entire instance by Index Advantage

SELECT user_seeks * avg_total_user_cost * (avg_user_impact * 0.01) AS index_advantage,

migs.last_user_seek, mid.statement AS [Database.Schema.Table],

mid.equality_columns, mid.inequality_columns, mid.included_columns,

migs.unique_compiles, migs.user_seeks, migs.avg_total_user_cost, migs.avg_user_impact

FROM sys.dm_db_missing_index_group_stats AS migs WITH (NOLOCK)

INNER JOIN sys.dm_db_missing_index_groups AS mig WITH (NOLOCK) ON migs.group_handle = mig.index_group_handle

INNER JOIN sys.dm_db_missing_index_details AS mid WITH (NOLOCK) ON mig.index_handle = mid.index_handle

ORDER BY index_advantage DESC;

INFORMATION:

-- Look at last user seek time, number of user seeks to help determine source and importance

-- SQL Server is overly eager to add included columns, so beware

_________________________________________________________________________________________________

-- Breaks down buffers used by current database by object (table, index) in the buffer cache

SELECT OBJECT_NAME(p.object_id) AS [ObjectName], p.object_id, p.index_id,

COUNT(*)/128 AS [buffer size(MB)], COUNT(*) AS [buffer_count]

FROM sys.allocation_units AS a

INNER JOIN sys.dm_os_buffer_descriptors AS b ON a.allocation_unit_id = b.allocation_unit_id

INNER JOIN sys.partitions AS p ON a.container_id = p.hobt_id

WHERE b.database_id = db_id() AND p.object_id > 100

GROUP BY p.object_id, p.index_id

ORDER BY buffer_count DESC;

INFORMATION:

-- Tells you what tables and indexes are using the most memory in the buffer cache

_________________________________________________________________________________________________

-- Detect blocking (run multiple times)

SELECT t1.resource_type AS 'lock type',

db_name(resource_database_id) AS 'database',

t1.resource_associated_entity_id AS 'blk object',

t1.request_mode AS 'lock req', -- lock requested

t1.request_session_id AS 'waiter sid', -- spid of waiter

t2.wait_duration_ms AS 'wait time',

(SELECT [text] FROM sys.dm_exec_requests AS r -- get sql for waiter

CROSS APPLY sys.dm_exec_sql_text(r.sql_handle)

WHERE r.session_id = t1.request_session_id) AS 'waiter_batch',

(SELECT substring(qt.text, r.statement_start_offset/2,

(CASE WHEN r.statement_end_offset = -1

THEN LEN(CONVERT(nvarchar(max), qt.text)) * 2

ELSE r.statement_end_offset END - r.statement_start_offset)/2)

FROM sys.dm_exec_requests AS r

CROSS APPLY sys.dm_exec_sql_text(r.sql_handle) AS qt

WHERE r.session_id = t1.request_session_id) AS 'waiter_stmt', -- statement blocked

t2.blocking_session_id AS 'blocker sid', -- spid of blocker

(SELECT [text] FROM sys.sysprocesses AS p -- get sql for blocker

CROSS APPLY sys.dm_exec_sql_text(p.sql_handle)

WHERE p.spid = t2.blocking_session_id) AS 'blocker_stmt'

FROM sys.dm_tran_locks AS t1

INNER JOIN sys.dm_os_waiting_tasks AS t2 ON t1.lock_owner_address = t2.resource_address;

_________________________________________________________________________________________________

SQL Server performance – first step

1) System Configuration

SELECT *

FROM sys.configurations

ORDER BY name OPTION (RECOMPILE);

2) Filename and Paths of Database

SELECT DB_NAME([database_id])AS [DBName],

name, physical_name, type_desc, state_desc,

CONVERT( bigint, size/128.0) [SizeinMB]

FROM sys.master_files

ORDER BY DB_NAME([database_id])

3) Capturing Wait Types and Wait Stats Information

-- Create Table

CREATE TABLE [MyWaitStatTable](

[wait_type] [nvarchar](60) NOT NULL,

[waiting_tasks_count] [bigint] NOT NULL,

[wait_time_ms] [bigint] NOT NULL,

[max_wait_time_ms] [bigint] NOT NULL,

[signal_wait_time_ms] [bigint] NOT NULL,

[CurrentDateTime] DATETIME NOT NULL,

[Flag] INT

)

GO

-- Populate Table at Time 1

INSERT INTO MyWaitStatTable

([wait_type],[waiting_tasks_count],[wait_time_ms],[max_wait_time_ms],[signal_wait_time_ms],

[CurrentDateTime],[Flag])

SELECT [wait_type],[waiting_tasks_count],[wait_time_ms],[max_wait_time_ms],[signal_wait_time_ms],

GETDATE(), 1

FROM sys.dm_os_wait_stats

GO

-- Desired Delay (for one hour)

WAITFOR DELAY '01:00:00'

-- Populate Table at Time 2

INSERT INTO MyWaitStatTable

([wait_type],[waiting_tasks_count],[wait_time_ms],[max_wait_time_ms],[signal_wait_time_ms],

[CurrentDateTime],[Flag])

SELECT [wait_type],[waiting_tasks_count],[wait_time_ms],[max_wait_time_ms],[signal_wait_time_ms],

GETDATE(), 2

FROM sys.dm_os_wait_stats

GO

-- Check the difference between Time 1 and Time 2

SELECT T1.wait_type, T1.wait_time_ms Original_WaitTime,

T2.wait_time_ms LaterWaitTime,

(T2.wait_time_ms - T1.wait_time_ms) DifferenceWaitTime

FROM MyWaitStatTable T1

INNER JOIN MyWaitStatTable T2 ON T1.wait_type = T2.wait_type

WHERE T2.wait_time_ms > T1.wait_time_ms

AND T1.Flag = 1 AND T2.Flag = 2

ORDER BY DifferenceWaitTime DESC

GO

-- Clean up

DROP TABLE MyWaitStatTable

GO

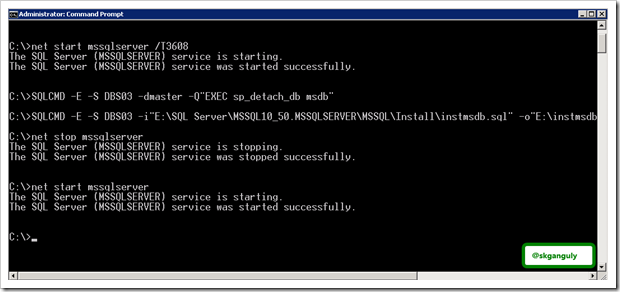

Rebuild MSDB Database

If you accidentally delete the transaction log file of the msdb database on a newly installed SQL Server 2008 R2 instance, the following steps rebuild it.

I searched Books Online and found the article on rebuilding system databases, which describes how to rebuild the msdb database.

Steps to Follow

- Stop all the SQL Server services, open a command prompt with elevated administrative privileges, and execute the following command:

NET START MSSQLSERVER /T3608

- Once SQL Server has started with trace flag 3608, you will be able to detach the msdb database. To do that, execute the following SQLCMD command:

SQLCMD -E -S DBS03 -dmaster -Q"EXEC sp_detach_db msdb"

- Rename the msdb data file, and execute the instmsdb.sql file from the install folder, as shown below:

SQLCMD -E -S DBS03 -i"E:\SQL Server\MSSQL10_50.MSSQLSERVER\MSSQL\Install\instmsdb.sql" -o"E:\instmsdb.out"

- Review instmsdb.out for any errors and re-apply the service packs.

- Stop the SQL Server.

- Start the SQL Server normally

Since I was able to connect to the instance without any errors, I stopped the SQL Server instance and copied all the system database files. Later I restarted the SQL Server Agent and the instance was online.

Hope this helps someone. Happy Learning!

Tracking Login Password Changes in SQL Server

Problem

By default, SQL Server does not keep track of login password changes. When the question initially came up with a user, I thought that perhaps it might be in the default trace or in the system_health extended event session. No such luck. So I was in search of an alternate way to keep track of these events, if not retroactively, at least going forward.

Solution

In a short time you can be up and running with collecting password change information using three different methods: server-side trace, event notifications, and SQL Server audit. Below I will provide an example using each technology. Note that all three examples are able to track password changes using ALTER LOGIN, the system procedure sp_password (deprecated since SQL Server 2005), or the Management Studio Login properties dialog.

Server-Side Trace

Trace includes an event called “Audit Login Change Password Event” – which is much more reliable than capturing all batches and filtering on ‘%sp_password%’ and ‘%ALTER%LOGIN%PASSWORD%’. The EventID is 107, so you can set up a very simple trace with the following code (make sure to set a proper path to the desired trace file):

DECLARE @TraceID INT, @MaxFileSize BIGINT;

SET @MaxFileSize = 5;

EXEC sp_trace_create

@TraceID OUTPUT,

2,

N'C:\Traces\PasswordChangeTrace', -- make sure to change this!

@MaxFileSize,

10;

EXEC sp_trace_setevent @TraceID,107, 1, 1;

EXEC sp_trace_setevent @TraceID,107, 11, 1;

EXEC sp_trace_setevent @TraceID,107, 8, 1;

EXEC sp_trace_setevent @TraceID,107, 12, 1;

EXEC sp_trace_setevent @TraceID,107, 14, 1;

EXEC sp_trace_setevent @TraceID,107, 40, 1;

EXEC sp_trace_setevent @TraceID,107, 42, 1;

EXEC sp_trace_setstatus @TraceID, 1;

SELECT @TraceID;

Make note of the TraceID in the output. Once this has been running, you can use that TraceID to review the events that have been captured using the following query:

DECLARE @path NVARCHAR(255);

SELECT @path = [path] FROM sys.traces WHERE id = <traceID from above>;

SELECT LoginName = TargetLoginName,

EventCount = COUNT(*),

FirstEvent = MIN(StartTime),

LastEvent = MAX(StartTime)

FROM sys.fn_trace_gettable(@path, DEFAULT)

WHERE EventClass = 107 -- in case you've added other events

GROUP BY TargetLoginName;

Since the above trace definition specifies a max of 10 x 5MB files, eventually an event that happens today will no longer be available through the above query. So as an added exercise you may consider periodically taking a snapshot of this data into a permanent table, and running your queries from there.
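The snapshot idea could look something like the following. This is only a sketch under stated assumptions: the table name dbo.PasswordChangeTraceLog and the TraceID value 2 are hypothetical, and the StartTime watermark can miss an event that shares its timestamp with the last row already copied.

```sql
-- Hypothetical permanent table; create it once in a utility database of your choice.
CREATE TABLE dbo.PasswordChangeTraceLog
(
    TargetLoginName NVARCHAR(256) NULL,
    ChangedBy       NVARCHAR(256) NULL,
    HostName        NVARCHAR(256) NULL,
    StartTime       DATETIME      NOT NULL
);
GO

-- Run periodically, for example from a SQL Server Agent job.
DECLARE @path NVARCHAR(260), @last DATETIME;

-- Assumption: 2 stands in for the TraceID returned when the trace was created.
SELECT @path = [path] FROM sys.traces WHERE id = 2;

-- Copy only events newer than the most recent one already stored.
SELECT @last = ISNULL(MAX(StartTime), '19000101')
FROM dbo.PasswordChangeTraceLog;

INSERT dbo.PasswordChangeTraceLog (TargetLoginName, ChangedBy, HostName, StartTime)
SELECT TargetLoginName, LoginName, HostName, StartTime
FROM sys.fn_trace_gettable(@path, DEFAULT)
WHERE EventClass = 107
  AND StartTime > @last;
```

Scheduling this more often than the trace files roll over keeps events from being lost between snapshots.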

Event Notifications

An alternative to trace is to set up a targeted Event Notification. These are lightweight, asynchronous messages sent via Service Broker that can be used to perform various actions in response to a specific event. One such event is AUDIT_LOGIN_CHANGE_PASSWORD_EVENT. In a lot of cases people use these to send an e-mail or start a job, but in this case we’re just going to log to a table. We can create the following table in msdb:

USE [msdb];

GO

CREATE TABLE dbo.PasswordChangeLog

(

LoginName SYSNAME,

EventTime DATETIME NOT NULL DEFAULT CURRENT_TIMESTAMP

);

We will then need to set up a queue and a notification to handle our events:

CREATE QUEUE PasswordChangeQueue;

GO

CREATE SERVICE PasswordChangeService ON QUEUE PasswordChangeQueue

([http://schemas.microsoft.com/SQL/Notifications/PostEventNotification]);

GO

CREATE EVENT NOTIFICATION PasswordChangeNotification

ON SERVER WITH FAN_IN

FOR AUDIT_LOGIN_CHANGE_PASSWORD_EVENT

TO SERVICE 'PasswordChangeService', 'current database';

GO

And then the following procedure can be used to log events to our table:

CREATE PROCEDURE dbo.LogPasswordChange

WITH EXECUTE AS OWNER

AS

BEGIN

SET NOCOUNT ON;

DECLARE @message_body XML;

WHILE (1 = 1)

BEGIN

WAITFOR

(

RECEIVE TOP(1) @message_body = message_body

FROM dbo.PasswordChangeQueue

), TIMEOUT 1000;

IF (@@ROWCOUNT = 1)

BEGIN

INSERT dbo.PasswordChangeLog(LoginName)

SELECT @message_body.value('(/EVENT_INSTANCE/LoginName)[1]', 'sysname');

END

END

END

GO

Finally, we can change the queue to call this stored procedure in response to the event:

ALTER QUEUE PasswordChangeQueue

WITH ACTIVATION

(

STATUS = ON,

PROCEDURE_NAME = dbo.LogPasswordChange,

MAX_QUEUE_READERS = 1,

EXECUTE AS OWNER

);

GO

Now change the password for a few logins, and you should see results from the following query:

SELECT LoginName, EventCount = COUNT(*), FirstEvent = MIN(EventTime), LastEvent = MAX(EventTime) FROM dbo.PasswordChangeLog GROUP BY LoginName;

Server Audit

The final option I’ll present here is creating a Server Audit Specification. You may already be using Server Audit, and if so, handling password change auditing using this technology might make more sense than using either of the above two methods. (However note that Server Audit requires Enterprise Edition of SQL Server 2008 or SQL Server 2008 R2 – in SQL Server 2012, this feature has been made available in all editions.)

One of the options for a Server Audit Specification is LOGIN_CHANGE_PASSWORD_GROUP. We can set up a file-based audit to capture these events with the following code (note that this needs to be performed in master and you should update the file path appropriately – you probably don’t want to rely on C:\ for this):

USE [master];

GO

CREATE SERVER AUDIT ChangePasswordAudit

TO FILE (FILEPATH = 'C:\Audits\', MAXSIZE = 5MB, MAX_ROLLOVER_FILES = 10)

WITH (ON_FAILURE = CONTINUE); -- important unless you want your server to halt on failure

ALTER SERVER AUDIT ChangePasswordAudit

WITH (STATE = ON);

CREATE SERVER AUDIT SPECIFICATION ChangePasswordAuditSpecification

FOR SERVER AUDIT ChangePasswordAudit

ADD (LOGIN_CHANGE_PASSWORD_GROUP)

WITH (STATE = ON);

GO

Once this is running, you can change a few passwords and then retrieve data from the audit using the following query:

DECLARE @folder VARCHAR(255);

SELECT @folder = log_file_path + '*'

FROM sys.server_file_audits

WHERE name = 'ChangePasswordAudit';

SELECT

LoginName = target_server_principal_name,

EventCount = COUNT(*),

FirstEvent = MIN(event_time),

LastEvent = MAX(event_time)

FROM sys.fn_get_audit_file(@folder, DEFAULT, DEFAULT)

WHERE action_id IN ('PWR', 'PWC') -- PWR = ALTER LOGIN / SSMS, PWC = sp_password

GROUP BY target_server_principal_name;

As with the trace above, this file-based audit is limited to 10 x 5MB files. So you may want to change those options to have the audit data hang around longer, or you may consider occasionally storing the result of this query in a permanent table.

One important thing to note about Server Audit is that it records the event time in UTC, so you might notice that the timestamps are off depending on your time zone. Therefore you may need to look into adding a helper function that will convert any UTC date to your time zone. Since this can get complicated with Daylight Saving Time, I’ve often found it easier to just set up all of our servers to do everything in UTC. 🙂
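If a full time zone helper is more than you need, a rough conversion can be done inline. The sketch below applies the server's current UTC offset to every row, so it assumes the offset has not changed since the event was recorded; events from the other side of a Daylight Saving Time switch will be off by an hour.

```sql
DECLARE @folder VARCHAR(255);

SELECT @folder = log_file_path + '*'
FROM sys.server_file_audits
WHERE name = 'ChangePasswordAudit';

SELECT
    LoginName      = target_server_principal_name,
    EventTimeUtc   = event_time,
    -- Shift by the server's current offset from UTC (ignores historical DST changes).
    EventTimeLocal = DATEADD(MINUTE, DATEDIFF(MINUTE, GETUTCDATE(), GETDATE()), event_time)
FROM sys.fn_get_audit_file(@folder, DEFAULT, DEFAULT)
WHERE action_id IN ('PWR', 'PWC');
```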

Conclusion

As you can see, there are a variety of ways to set up tracking for password changes, and each method is relatively straightforward to implement. While it is still impossible to obtain this information from the past, once you have implemented one of the above solutions, you will be able to look back on this information over time.

SSMS Timeout Expired Error When Making Changes To a Table

Problem

I was required to add a new integer type column with an identity property to an existing table through SSMS designer. The operation failed and generated a timeout message. The table was large and I expected it would take some time for this task, but I didn’t think it would have a timeout issue. In this tip we look at how to set this timeout value as well as look at other timeout values that can be set for SQL Server.

Solution

To simulate the timeout error, let’s create a table with 500,000 rows. We will use this for the examples.

-- Create the table for demo

IF EXISTS (SELECT * FROM sys.objects where name = 'TestingTimeOut')

DROP TABLE TestingTimeOut

GO

create table TestingTimeOut (EmpName varchar(75), Designation varchar(50), Department varchar(50))

GO

-- Populate the table with 500000 rows

-- Multiple executions by providing number with GO is not ANSI standard

-- Should not be used in production environment

INSERT INTO TestingTimeOut VALUES ('Atif Shehzad', 'DBA', 'Human Resource')

GO 500000

Transaction timeout for SSMS Designer operates only when SQL Server object modification is performed through the SSMS Designer. It is not a server level parameter and may be viewed or modified in the ‘Designers’ link in the Options menu of SSMS. Its value can be different for each SSMS client and is not stored as a server level configuration.

To check or change this setting, within SSMS go to Tools > Options and click on the Designers link in the left panel as shown below.

The default value is 30 seconds, but for the timeout simulation let’s change it to 1 second and save the changes.

In SSMS, find the table that we just created, right click and select Design. Add an integer type column EmpID as a primary key with an identity property and try to save the modifications. Since we changed the timeout to 1 second, this should cause a timeout error as shown below. If the error is not generated you may need to increase the number of rows in the demo table and try again. (Note if you get this error “Saving changes is not permitted” then check out this tip.)

This is the T-SQL that SSMS generates and is trying to execute. We can see here that it is just doing an ALTER TABLE with the ADD option.

/* To prevent any potential data loss issues, you should review this script in detail before running it outside the context of the database designer.*/

BEGIN TRANSACTION

SET QUOTED_IDENTIFIER ON

SET ARITHABORT ON

SET NUMERIC_ROUNDABORT OFF

SET CONCAT_NULL_YIELDS_NULL ON

SET ANSI_NULLS ON

SET ANSI_PADDING ON

SET ANSI_WARNINGS ON

COMMIT

BEGIN TRANSACTION

GO

ALTER TABLE dbo.TestingTimeOut ADD

EmpID int NOT NULL IDENTITY (1, 1)

GO

ALTER TABLE dbo.TestingTimeOut ADD CONSTRAINT

PK_TestingTimeOut PRIMARY KEY CLUSTERED

(

EmpID

) WITH( STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY]

GO

ALTER TABLE dbo.TestingTimeOut SET (LOCK_ESCALATION = TABLE)

GO

COMMIT

If we try to add the EmpID as the first column, as shown below, we can see that SSMS generates a pre-save warning that this will take some time.

This is the T-SQL that SSMS generates and we can see that this is a much more intensive script that drops and recreates the table.

/* To prevent any potential data loss issues, you should review this script in detail before running it outside the context of the database designer.*/

BEGIN TRANSACTION

SET QUOTED_IDENTIFIER ON

SET ARITHABORT ON

SET NUMERIC_ROUNDABORT OFF

SET CONCAT_NULL_YIELDS_NULL ON

SET ANSI_NULLS ON

SET ANSI_PADDING ON

SET ANSI_WARNINGS ON

COMMIT

BEGIN TRANSACTION

GO

CREATE TABLE dbo.Tmp_TestingTimeOut

(

EmpID int NOT NULL IDENTITY (1, 1),

EmpName varchar(75) NULL,

Designation varchar(50) NULL,

Department varchar(50) NULL

) ON [PRIMARY]

GO

ALTER TABLE dbo.Tmp_TestingTimeOut SET (LOCK_ESCALATION = TABLE)

GO

SET IDENTITY_INSERT dbo.Tmp_TestingTimeOut OFF

GO

IF EXISTS(SELECT * FROM dbo.TestingTimeOut)

EXEC('INSERT INTO dbo.Tmp_TestingTimeOut (EmpName, Designation, Department)

SELECT EmpName, Designation, Department FROM dbo.TestingTimeOut WITH (HOLDLOCK TABLOCKX)')

GO

DROP TABLE dbo.TestingTimeOut

GO

EXECUTE sp_rename N'dbo.Tmp_TestingTimeOut', N'TestingTimeOut', 'OBJECT'

GO

ALTER TABLE dbo.TestingTimeOut ADD CONSTRAINT

PK_TestingTimeOut PRIMARY KEY CLUSTERED

(

EmpID

) WITH( STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY]

GO

COMMIT

Summary

So if you face these timeout issues when using SSMS to make changes, you now know the exact parameter to manipulate. The solution to this problem is to increase the timeout limit for the Designer. The specified limit applies to any server you connect to from that SSMS client.
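Another way around the Designer timeout is to run the change from a query window, where the default execution time-out is 0 (no limit). The statement below is a sketch based on the script SSMS generated for the appended column, folded into a single ALTER TABLE; review it against your own table before running it.

```sql
-- Adds the identity column and its clustered primary key in one statement.
-- Run from a query window, so the Designer transaction time-out does not apply.
ALTER TABLE dbo.TestingTimeOut
    ADD EmpID int NOT NULL IDENTITY (1, 1)
        CONSTRAINT PK_TestingTimeOut PRIMARY KEY CLUSTERED;
```

The table is still locked while the change runs, so on a large table it is worth scheduling this for a quiet period.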

SQL Server Database Mirroring Breaks if Endpoint Owner is Deleted

Problem

Will SQL Server database mirroring stop working if the user used to create the endpoints is removed? In this tip I will walk through the steps to find out.

Solution

Before giving a definitive answer to the above question, I created the following test environment.

- A Windows 2008 VM (Virtual Server) named SQLTEST1 with SQL 2008 Developer installed

- A Windows 2008 VM (Virtual Server) named SQLTEST2 with SQL 2008 Developer installed

- Since these servers are not in a domain I created a Windows user WinUser on both VMs and used this account to setup mirroring.

- I created a test database called mytest and mirrored it between the two SQL Servers SQLTEST1 and SQLTEST2.

Check Mirroring Status

Let’s start Database Mirroring Monitor to verify that database mirroring is working. Below we can see that the databases are synchronized.

Drop SQL Server Login

Now, let’s try to drop the SQLTEST1\WinUser login. As expected, the SQL login cannot be dropped because it owns the mirroring endpoint. Since this failed I did not bother to try this on SQLTEST2.

Drop Windows User

Now let’s try to drop the Windows user WinUser on both servers. This was successful on both servers.

Check Mirroring Status

Now that WinUser has been deleted on both servers, let’s check if database mirroring is still working. Somewhat surprisingly, database mirroring is still working. To verify this further, I created tables and inserted data in the mytest database on the principal server SQLTEST1 and confirmed that the changes were successfully replicated to the mirror server SQLTEST2.

Restart SQL Server

Now, let’s restart the SQL Server service on both servers.

Check Mirroring Status

If we check the mirroring status again we can see that it has failed.

Conclusion

To avoid a possible mirroring outage, verify that a Windows user does not own the mirroring endpoints, because if the user is removed from Active Directory or from the local machine, mirroring will break the next time the server is restarted. If you do use a Windows user, make sure that your accounts are well documented and not accidentally deleted.
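One way to carry out that check is to query the endpoint catalog views and, if needed, hand ownership to a SQL login. The following is a minimal sketch: the endpoint name Mirroring and the choice of sa as the new owner are assumptions, not details from the test above.

```sql
-- List each mirroring endpoint and the server principal that owns it.
SELECT e.name       AS endpoint_name,
       sp.name      AS owner_name,
       sp.type_desc AS owner_type
FROM sys.database_mirroring_endpoints AS e
JOIN sys.server_principals AS sp
    ON sp.principal_id = e.principal_id;

-- Reassign ownership to a SQL login.
-- [Mirroring] is a hypothetical endpoint name; use the name returned above.
-- [sa] is only an example of an owner that is not a Windows account.
ALTER AUTHORIZATION ON ENDPOINT::[Mirroring] TO [sa];
```

After changing the owner, it is worth confirming that the accounts used by the partner instances still have CONNECT permission on the endpoint.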

SQL Server 2012 Functions – Lead and Lag

Problem

SQL Server 2012 introduces two new analytical functions, LEAD() and LAG(). In this tip we will be exploring these functions and how to use them.

Solution

These functions access data from a subsequent row (lead) and previous row (lag) in the same result set without the use of a self-join.

The syntax for the Lead and Lag functions is:

LAG|LEAD (scalar_expression [,offset] [,default])

OVER ( [ partition_by_clause ] order_by_clause )

Let me explain using this example which creates table Test_table in database TestDB and inserts some data.

CREATE DATABASE [TestDB]

GO

USE [TestDB]

GO

--Create test table to hold some data

CREATE TABLE [dbo].[Test_table](

[id] [int] IDENTITY(1,1) NOT NULL,

[Department] [nchar](10) NOT NULL,

[Code] [int] NOT NULL,

CONSTRAINT [PK_Test_table] PRIMARY KEY CLUSTERED

(

[id] ASC

)WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON,

ALLOW_PAGE_LOCKS = ON) ON [PRIMARY]

) ON [PRIMARY]

GO

--Insert some test data

insert into Test_table values('A',111)

insert into Test_table values('B',29)

insert into Test_table values('C',258)

insert into Test_table values('D',333)

insert into Test_table values('E',15)

insert into Test_table values('F',449)

insert into Test_table values('G',419)

insert into Test_table values('H',555)

insert into Test_table values('I',524)

insert into Test_table values('J',698)

insert into Test_table values('K',715)

insert into Test_table values('L',799)

insert into Test_table values('M',139)

insert into Test_table values('N',219)

insert into Test_table values('O',869)

Our table data will look like this:

Now the query for lead value and lag value will be:

SELECT id,department,Code, LEAD(Code,1) OVER (ORDER BY Code ) LeadValue, LAG(Code,1) OVER (ORDER BY Code ) LagValue FROM test_table

In the above example, for the first row the Lead value is the value of the next row because the offset is set to 1. The Lag value is NULL because there were no previous rows.

Now if we change the Lead offset to 2 and Lag offset to 3 the output will be as follows:
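The query for that change is not shown. Assuming it is the earlier query with only the offsets changed, it would read as follows:

```sql
-- Same query as before, with a LEAD offset of 2 and a LAG offset of 3.
SELECT id, Department, Code,
       LEAD(Code, 2) OVER (ORDER BY Code) AS LeadValue,
       LAG(Code, 3)  OVER (ORDER BY Code) AS LagValue
FROM dbo.Test_table;
```

With the sample data, the first row in Code order (Code 15) gets a LeadValue of 111, the value two rows ahead. The last two rows have no row two positions ahead, and the first three rows have no row three positions behind, so those positions return NULL.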

One thing to note is that NULL values appear because there is no row at the requested Lead or Lag offset. To replace the NULL values with zero, pass 0 as the third (default) argument of the Lead/Lag function as shown below.

SELECT id,department,Code, LEAD(Code,2,0) OVER (ORDER BY Code ) LeadValue, LAG(Code,3,0) OVER (ORDER BY Code ) LagValue FROM test_table
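For comparison, the self-join approach that these functions replace might look like the sketch below. It numbers the rows with ROW_NUMBER() and joins each row to its neighbours. The CTE name Ordered and the aliases are illustrative, and it assumes the Test_table created above.

```sql
-- Pre-2012 style equivalent of LEAD(Code,1) and LAG(Code,1):
-- number the rows in Code order, then self-join each row to its neighbours.
WITH Ordered AS (
    SELECT id, Department, Code,
           ROW_NUMBER() OVER (ORDER BY Code) AS rn
    FROM dbo.Test_table
)
SELECT cur.id, cur.Department, cur.Code,
       nxt.Code AS LeadValue,   -- value from the next row, NULL on the last row
       prv.Code AS LagValue     -- value from the previous row, NULL on the first row
FROM Ordered AS cur
LEFT JOIN Ordered AS nxt ON nxt.rn = cur.rn + 1
LEFT JOIN Ordered AS prv ON prv.rn = cur.rn - 1
ORDER BY cur.Code;
```

The window-function version reads the table once and needs no join conditions, which is the main appeal of LEAD and LAG.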

New columnstore index feature in SQL Server 2012

Problem

A new feature in SQL Server 2012 is the Columnstore Index, which can be used to significantly improve query performance. In this tip we will take a look at how it works and how we can use it.

Solution

There are two types of storage available in the database: RowStore and ColumnStore.

In RowStore, the rows of data are placed sequentially on a page, while in ColumnStore the values of a single column, taken from multiple rows, are stored contiguously. A ColumnStore Index is an index built on ColumnStore storage.

Now let’s show how we can create a ColumnStore Index and how performance can be improved.

Creating a Column Store Index

Creating a ColumnStore Index is the same as creating a NonClustered Index except we need to add the ColumnStore keyword as shown below.

The syntax of a ColumnStore Index is:

CREATE NONCLUSTERED COLUMNSTORE INDEX Index_Name ON Table_Name (Column1, Column2, ... Column N)

Performance Test

I used the AdventureWorks sample database for performing tests.

--Create the Test Table
USE [AdventureWorks2008R2]
GO
SET ANSI_NULLS ON
GO
SET QUOTED_IDENTIFIER ON
GO
CREATE TABLE [dbo].[Test_Person](
  [BusinessEntityID] [int] NOT NULL,
  [PersonType] [nchar](2) NOT NULL,
  [NameStyle] [dbo].[NameStyle] NOT NULL,
  [Title] [nvarchar](8) NULL,
  [FirstName] [dbo].[Name] NOT NULL,
  [MiddleName] [dbo].[Name] NULL,
  [LastName] [dbo].[Name] NOT NULL,
  [Suffix] [nvarchar](10) NULL,
  [EmailPromotion] [int] NOT NULL,
  [AdditionalContactInfo] [xml](CONTENT [Person].[AdditionalContactInfoSchemaCollection]) NULL,
  [Demographics] [xml](CONTENT [Person].[IndividualSurveySchemaCollection]) NULL,
  [rowguid] [uniqueidentifier] ROWGUIDCOL NOT NULL,
  [ModifiedDate] [datetime] NOT NULL
) ON [PRIMARY] TEXTIMAGE_ON [PRIMARY]
GO

-- We populated this table with the data stored in table Person.Person.
-- As we need plenty of data, we ran the insert 100 times.
INSERT INTO [dbo].[Test_Person] SELECT P1.* FROM Person.Person P1
GO 100

-- At this point we have 1,997,200 rows in the table.

-- Create clustered index on column [BusinessEntityID]
CREATE CLUSTERED INDEX [CL_Test_Person] ON [dbo].[Test_Person] ([BusinessEntityID])
GO

-- Create nonclustered index on 3 columns
-- (it needs a name different from the columnstore index created next)
CREATE NONCLUSTERED INDEX [NonClustered__Test_Person] ON [dbo].[Test_Person] ([FirstName], [MiddleName], [LastName])
GO

-- Create nonclustered columnstore index on 3 columns
CREATE NONCLUSTERED COLUMNSTORE INDEX [ColumnStore__Test_Person] ON [dbo].[Test_Person] ([FirstName], [MiddleName], [LastName])
GO

At this point we have created the ColumnStore Index on our test table. Now we will run the SELECT query with and without the ColumnStore Index and analyze performance.

Query Without ColumnStore Index

select [LastName],Count([FirstName]),Count([MiddleName]) from dbo.Test_Person group by [LastName] Order by [LastName] OPTION (IGNORE_NONCLUSTERED_COLUMNSTORE_INDEX)

We have used the OPTION(IGNORE_NONCLUSTERED_COLUMNSTORE_INDEX) query hint so that the optimizer does not use the ColumnStore Index this time.

Query With ColumnStore Index

select [LastName],Count([FirstName]),Count([MiddleName]) from dbo.Test_Person group by [LastName] Order by [LastName]

Here are the Actual Execution Plans for both queries:

We can see that the relative query cost is 59% when using the NonClustered Index and 13% when using the ColumnStore Index.

Now if we hover the mouse over the Index Scans we can see details for these operations. The below is a comparison:

It is clear from the results that the query runs much faster after creating the ColumnStore Index. The values of each column needed by the query are stored together on the same pages, so the query does not have to read every page of the table to get at these columns.

Performing INSERT, DELETE or UPDATE Operations

In SQL Server 2012 we cannot perform DML (Insert/Update/Delete) operations on a table that has a ColumnStore Index, because the index puts the table's data in a read-only mode. This makes the feature a good fit for a Data Warehouse, where most operations are read only.

For example, if you perform a DELETE operation on a table with a ColumnStore Index you will get this error:

Msg 35330, Level 15, State 1, Line 1 DELETE statement failed because data cannot be updated in a table with a columnstore index. Consider disabling the columnstore index before issuing the DELETE statement, then rebuilding the columnstore index after DELETE is complete.

To perform the operation, we need to disable the ColumnStore Index before issuing the command, as shown below:

ALTER INDEX Index_Name ON Table_Name DISABLE
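Put together with the rebuild step that the error message recommends, the full cycle could be sketched as follows. It uses the test table and index from the performance test; the DELETE predicate is only an illustration.

```sql
-- 1. Disable the columnstore index so the table accepts DML again.
ALTER INDEX [ColumnStore__Test_Person] ON [dbo].[Test_Person] DISABLE;

-- 2. Run the data modification (illustrative predicate).
DELETE FROM [dbo].[Test_Person]
WHERE [BusinessEntityID] = 1;

-- 3. Rebuild the index so queries can use it again.
ALTER INDEX [ColumnStore__Test_Person] ON [dbo].[Test_Person] REBUILD;
```

While the index is disabled, queries fall back to the other indexes on the table, so this pattern suits batch load windows better than frequent small changes.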

Creating a ColumnStore Index using Management Studio

Right click and select New Index and select Non-Clustered Columnstore Index…

Click add to add the columns for the index.

After selecting the columns click OK to create the index.

Limitations of a ColumnStore Index

- It cannot have more than 1024 columns.

- It cannot be clustered, only NonClustered ColumnStore indexes are available.

- It cannot be a unique index.

- It cannot be created on a view or indexed view.

- It cannot include a sparse column.

- It cannot act as a primary key or a foreign key.

- It cannot be changed using the ALTER INDEX statement. You have to drop and re-create the ColumnStore index instead. (Note: you can use ALTER INDEX to disable and rebuild a ColumnStore index.)

- It cannot be created with the INCLUDE keyword.

- It cannot include the ASC or DESC keywords for sorting the index.

Simple script to backup all SQL Server databases

Problem

Sometimes things that seem complicated are much easier than you think, and this is the power of using T-SQL to take care of repetitive tasks. One of these tasks may be the need to back up all databases on your server. This is not a big deal if you have a handful of databases, but I have seen several servers where there are 100+ databases on the same instance of SQL Server. You could use Enterprise Manager to back up the databases or even use Maintenance Plans, but using T-SQL is a much simpler and faster approach.

Solution

With T-SQL you can generate your backup commands, and with a cursor you can loop through all of your databases to back them up one by one. This is a very straightforward process and you only need a handful of commands to do this.

Here is the script that will allow you to back up each database within your instance of SQL Server. You will need to change @path to the appropriate backup directory, and each backup file will take on the name of “DBnameYYYDDMM.BAK”.

DECLARE @name VARCHAR(50) -- database name

In this script we are bypassing the system databases, but these could easily be included as well. You could also change this into a stored procedure and pass in a database name, or if it is left NULL it backs up all databases. Any way you choose to use it, this script gives you a starting point for backing up all of your databases.
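As a sketch of the approach described here (a cursor over the user databases that issues one BACKUP DATABASE command per database), something like the following could be used. Only @name and @path come from the text. The other variable names, the C:\Backup\ directory, the use of sys.databases and the date style are assumptions.

```sql
DECLARE @name     SYSNAME;        -- database name
DECLARE @path     VARCHAR(256);   -- backup directory (assumed; must already exist)
DECLARE @fileName VARCHAR(400);   -- full path of the backup file
DECLARE @fileDate VARCHAR(8);     -- date stamp used in the file name

SET @path = 'C:\Backup\';
SET @fileDate = CONVERT(VARCHAR(8), GETDATE(), 112);  -- style 112 gives YYYYMMDD

DECLARE db_cursor CURSOR LOCAL FAST_FORWARD FOR
    SELECT name
    FROM sys.databases
    WHERE name NOT IN ('master', 'model', 'msdb', 'tempdb')  -- bypass system databases
      AND state_desc = 'ONLINE';                             -- skip databases that cannot be backed up

OPEN db_cursor;
FETCH NEXT FROM db_cursor INTO @name;

WHILE @@FETCH_STATUS = 0
BEGIN
    -- Build the file name and back up the current database.
    SET @fileName = @path + @name + @fileDate + '.BAK';
    BACKUP DATABASE @name TO DISK = @fileName;

    FETCH NEXT FROM db_cursor INTO @name;
END;

CLOSE db_cursor;
DEALLOCATE db_cursor;
```

To include the system databases, adjust the NOT IN list; tempdb has to stay excluded because it cannot be backed up.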

Upgrading SQL Server databases and changing compatibility levels

Problem

When upgrading databases from an older version of SQL Server using either the backup and restore method or the detach and attach method, the compatibility level does not automatically change, and therefore your databases still act as though they are running on an earlier version of SQL Server. From an overall standpoint this is not a major problem, but there are certain features that you will not be able to take advantage of unless your database compatibility level is changed. This tip will show you how to check the current compatibility level, how to change the compatibility level and also some of the differences between earlier versions and SQL Server 2005.

Solution

The first thing that you need to do is to check the compatibility level that your database is running under. As mentioned above, any database that is upgraded using the backup and restore or detach and attach method will not change the compatibility level automatically, so you will need to check each database and make the change.

Although SQL Server has changed its naming convention to SQL Server 2000, 2005 and the soon to be released 2008, the internal version numbers still remain. Here is a list of the compatibility levels (versions) that you will see:

- 60 = SQL Server 6.0

- 65 = SQL Server 6.5

- 70 = SQL Server 7.0

- 80 = SQL Server 2000

- 90 = SQL Server 2005

Identifying Compatibility Level

To check the compatibility level of your databases you can use one of these methods:

Using SQL Server Management Studio, right click on the database, select “Properties” and look at the “Options” page for each database as the following image shows:

Another option is to use sp_helpdb so you can get the information for all databases at once:

EXEC sp_helpdb

Or select directly from the sys.databases catalog to get the information for all databases.

SELECT * FROM sys.databases
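To narrow that output to the databases that still need attention, a query along these lines could be used. It is a sketch that assumes the model database is at the instance's native compatibility level, which is worth confirming on your server first.

```sql
-- List databases whose compatibility level is lower than that of model.
SELECT name, compatibility_level
FROM sys.databases
WHERE compatibility_level <
      (SELECT compatibility_level FROM sys.databases WHERE name = 'model')
ORDER BY name;
```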

Compatibility Level for New Databases

When issuing a CREATE DATABASE statement there is not a way to select which compatibility level you want to use. The compatibility level that is used is the compatibility level of your model database.

Here is a sample CREATE DATABASE command, but there is not an option to change the compatibility level.

CREATE DATABASE [test] ON PRIMARY
( NAME = N'test', FILENAME = N'Z:\SQLData\test.mdf', SIZE = 2048KB, FILEGROWTH = 1024KB )
LOG ON
( NAME = N'test_log', FILENAME = N'Y:\SQLData\test3_log.ldf', SIZE = 3072KB, FILEGROWTH = 10%)
GO

When creating a database using SQL Server Management Studio you have the ability to change the compatibility level on the “Options” tab such as follows:

If we use the “Script” option we can see that SQL Server issues the CREATE DATABASE statement and then issues “sp_dbcmptlevel” to set the database compatibility level to 80 as shown below.

CREATE DATABASE [test] ON PRIMARY
( NAME = N'test', FILENAME = N'Z:\SQLData\test.mdf', SIZE = 2048KB, FILEGROWTH = 1024KB )
LOG ON
( NAME = N'test_log', FILENAME = N'Y:\SQLData\test3_log.ldf', SIZE = 3072KB, FILEGROWTH = 10%)
GO
EXEC dbo.sp_dbcmptlevel @dbname=N'test', @new_cmptlevel=80
GO

Changing Compatibility Level

So once you have identified the compatibility level of your database and know what you want to change it to you can use the sp_dbcmptlevel system stored procedure to make the change. The command has the following syntax:

sp_dbcmptlevel [ [ @dbname = ] name ] [ , [ @new_cmptlevel = ] version ]

--to change to level 80
EXEC dbo.sp_dbcmptlevel @dbname=N'test', @new_cmptlevel=80
--to change to level 90
EXEC dbo.sp_dbcmptlevel @dbname=N'test', @new_cmptlevel=90
--or
EXEC sp_dbcmptlevel 'test', '90'
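On SQL Server 2008 and later, sp_dbcmptlevel is deprecated and the same change is made with ALTER DATABASE. A minimal sketch, using the test database from the examples above:

```sql
-- Alternative to sp_dbcmptlevel on SQL Server 2008 and later.
ALTER DATABASE [test] SET COMPATIBILITY_LEVEL = 90;

-- Confirm the change.
SELECT name, compatibility_level
FROM sys.databases
WHERE name = N'test';
```

The levels an instance accepts depend on its version, so an older level such as 80 may be rejected on newer releases.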

Differences

There are several differences in how compatibility levels affect your database operations. SQL Server Books Online has a list of these differences, and the following table shows a few of them:

| Compatibility level setting of 80 or earlier | Compatibility level setting of 90 | Possibility of impact |
|---|---|---|
| For locking hints in the FROM clause, the WITH keyword is always optional. | With some exceptions, table hints are supported in the FROM clause only when the hints are specified with the WITH keyword. For more information, see FROM (Transact-SQL). | High |
| The *= and =* operators for outer join are supported with a warning message. | These operators are not supported; the OUTER JOIN keyword should be used. | High |
| SET XACT_ABORT OFF is allowed inside a trigger. | SET XACT_ABORT OFF is not allowed inside a trigger. | Medium |

(Source: SQL Server 2005 Books Online, which has the complete list of these items.)

In addition, each new compatibility level offers a new list of reserved keywords. Here is a list of the new keywords for SQL Server 2005.

| Compatibility level setting | Reserved keywords |
|---|---|
| 90 | PIVOT, UNPIVOT, REVERT, TABLESAMPLE |
| 80 | COLLATE, FUNCTION, OPENXML |
| 70 | BACKUP, CONTAINS, CONTAINSTABLE, DENY, FREETEXT, FREETEXTTABLE, PERCENT, RESTORE, ROWGUIDCOL, TOP |
| 65 | AUTHORIZATION, CASCADE, CROSS, DISTRIBUTED, ESCAPE, FULL, INNER, JOIN, LEFT, OUTER, PRIVILEGES, RESTRICT, RIGHT, SCHEMA, WORK |

(Source: SQL Server 2005 Books Online)

If one of these keywords is being used as an identifier and your database is set to this compatibility level, the commands will fail. To get around this, you can put the keyword in either square brackets ([ ]) or double quotation marks (" "), such as [PIVOT] or "PIVOT".
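A short sketch of both delimiting styles follows. The table dbo.KeywordDemo is hypothetical and exists only to give the keyword a place to appear as a column name.

```sql
-- PIVOT is reserved at compatibility level 90, so it must be delimited
-- when it is used as an identifier. dbo.KeywordDemo is a hypothetical table.
CREATE TABLE dbo.KeywordDemo ([PIVOT] INT NOT NULL);
GO

-- Square brackets always work.
SELECT [PIVOT] FROM dbo.KeywordDemo;
GO

-- Double quotation marks work only when QUOTED_IDENTIFIER is ON.
SET QUOTED_IDENTIFIER ON;
GO
SELECT "PIVOT" FROM dbo.KeywordDemo;
GO
```

Square brackets are the safer choice in scripts, because they do not depend on a session setting.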

Summary

The compatibility level setting is used by SQL Server to determine how certain new features should be handled. This was set up so you could migrate your databases to a later release of SQL Server without having to worry about the application breaking. This setting can be changed forward and backward if needed, so if you do change your compatibility level and find that there are problems, you can set the value back again until you resolve all of the issues that you may be facing during the upgrade.

In addition, there are certain features that only work if the database is set to the latest compatibility level. Therefore, to get all of the benefits of the version of SQL Server you are running, you need to make sure you are using the latest compatibility level.
